Special thanks to @fred, Albiona Hoti, @quintus, @socrates1024, @jonathan, Teng Yan (Chain of Thought), @gz, and Shelven Zhou (Phala) for feedback and edits.

The previous post reviewed the existing AI Web2 and Web3 ecosystems and where blockchain and trusted verification fit within the Crypto x AI tech stack. In this post, we dive into AI Agent frameworks and the steps toward Agentic AI.

1. AI Agent Overview

Defining AI Agents

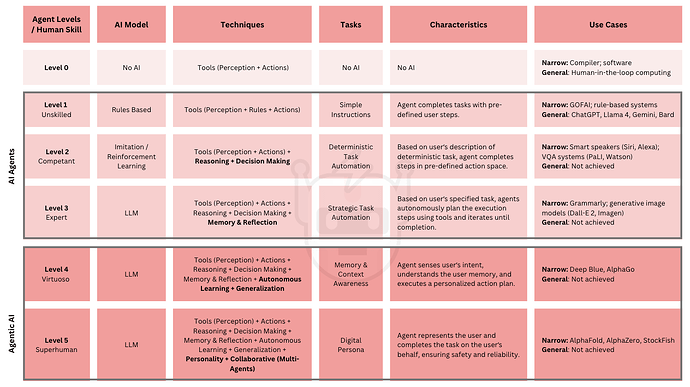

AI agents are software programs designed to complete specific tasks or achieve goals, in the simplest case by following preset rules. They automate tasks ranging from organizing emails according to rules (Level 1) to answering questions from training data or editing documents according to grammar rules. Beyond rule-based models, AI agents can leverage machine learning models such as reinforcement learning (RL) (Level 2) or large language models (LLMs) (Level 3). At their most advanced, AI agents can reach expert-level execution, performing at or above the 90th percentile of human capability (Level 4) [1].

Figure 1. Levels of AI Agents [1, 2].

Types of AI Agents

Artificial Intelligence (AI) has evolved dramatically over the past decades, moving from theoretical concepts to practical applications that permeate our daily lives. At the forefront of this evolution are AI agents—software entities designed to perceive their environment, make decisions, and take actions to achieve specific goals. While recent advancements in large language models have brought AI agents into the spotlight, these sophisticated systems have been operating in various industries for years, each with distinct architectures and capabilities tailored to different use cases.

AI agents can be categorized into several distinct types based on their complexity, decision-making processes, and learning capabilities [3]:

- Simple Reflex Agents. These agents operate within a specified ruleset using only current data. They have no memory and do not interact with other agents. Simple reflex agents are suitable for basic tasks that do not require specific training, but they cannot complete tasks if conditions fall outside their predefined ruleset.

  - Examples: A simple trading bot that submits an order when a price limit is reached. HVAC thermostats that sense the temperature and activate heating or cooling when it crosses a threshold.

- Model-based Reflex Agents. These agents employ sophisticated decision models to evaluate possible solutions. They perceive external data and update their decisions based on internal models to achieve the user’s goal.

  - Examples: Social media bots that use LLMs to respond to posts and user interactions. Gaming agents that anticipate player actions and respond strategically.

- Goal-based Agents. These agents ingest environmental data and evaluate various scenarios to determine optimal solutions. They excel at complex tasks that require finding efficient paths to an answer.

  - Examples: Risk management agents that rebalance a portfolio to optimize a risk-reward metric. Robots that use goal-based agents to perform tasks autonomously.

- Utility-based Agents. These agents solve complex problems by optimizing a utility value or function that reflects the user’s goal. They analyze different scenarios and select the option that best optimizes the desired outcome.

  - Examples: Healthcare agents that diagnose patients and monitor treatment to improve patients’ health. Self-driving cars, whose agents weigh factors such as speed, safety, fuel efficiency, and passenger comfort.

- Learning Agents. These agents improve their performance based on previous results and newly generated problems. They use inputs and feedback mechanisms to adapt their learning modules, creating new training tasks to better achieve their goals. This adaptive capability allows them to operate effectively in new environments.

  Learning agents comprise four main modules:

  - Learning. Improves the agent’s knowledge by processing external data sources, environmental cues, and feedback.
  - Critic. Provides feedback to the agent and evaluates whether responses meet performance standards.
  - Performance. Selects actions based on accumulated learning.
  - Problem Generator. Proposes different actions to take.

  - Examples: Recommendation systems use agents to analyze user behaviors and preferences to create personalized content and product offerings. Virtual assistants like Siri and Alexa learn from user interactions to provide customized responses and complete tasks.

- Hierarchical Agents. These agents are composed of multiple levels. Higher-level agents (supervisors) break complex tasks into simpler ones for lower-level agents (subordinates) to complete. Subordinate agents operate autonomously and submit their results to the supervisor, which collects the results, coordinates the subordinates, and ensures the overall goal is achieved.

  - Examples: Orchestration systems use hierarchical agents, with a supervisor managing several simple subordinate agents. Manufacturing systems use a supervisor agent to monitor different areas of the workflow while simple agents perform basic tasks within it.
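The contrast between the simplest category and a more deliberative one can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the temperature thresholds, the `Route` fields, and the utility weights are hypothetical values chosen only to show a simple reflex agent mapping a percept straight to an action, versus a utility-based agent scoring every option and picking the best.

```python
from dataclasses import dataclass


def simple_reflex_thermostat(temp_c: float, low: float = 19.0, high: float = 24.0) -> str:
    """Simple reflex agent: maps the current percept directly to an action.

    There is no memory and no world model, only if-then rules.
    """
    if temp_c < low:
        return "heat"
    if temp_c > high:
        return "cool"
    return "idle"


@dataclass
class Route:
    name: str
    minutes: float      # expected travel time
    risk: float         # 0.0 (safe) to 1.0 (dangerous)
    fuel_litres: float


def utility(route: Route, w_time: float = 1.0, w_risk: float = 50.0, w_fuel: float = 2.0) -> float:
    """Hypothetical utility: a weighted sum of costs, negated so higher is better."""
    return -(w_time * route.minutes + w_risk * route.risk + w_fuel * route.fuel_litres)


def utility_based_choice(options: list[Route]) -> Route:
    """Utility-based agent: score every option and pick the highest utility."""
    return max(options, key=utility)


routes = [Route("highway", 20, 0.3, 3.0), Route("side streets", 28, 0.1, 2.5)]
print(simple_reflex_thermostat(15.0))        # heat
print(utility_based_choice(routes).name)     # side streets
```

With these weights the slower but safer route wins because risk is penalized heavily; lowering `w_risk` flips the choice to the highway, which is exactly the lever a utility function gives the agent's designer.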

AI Agent Components

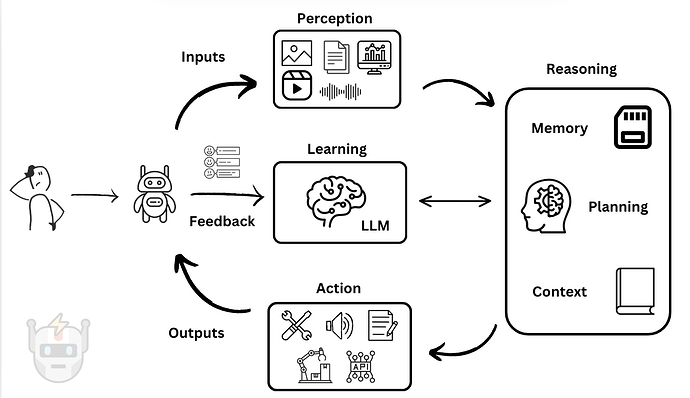

Figure 2. Large language model agent operational modules [4, 7].

AI agents typically consist of four operational modules [4, 5, 6, 7]:

- Perception. The agent takes in new information from its surroundings and extracts relevant features. Agents use data from external sources such as APIs, database queries, and user inputs. Input data can include structured data, natural language, images, audio, or video files, all of which require appropriate preprocessing.

- Reasoning. The agent leverages its knowledge base and memory to formulate strategies and decide which actions to take based on the current situation, its goals, and the available actions. Depending on its sophistication, the reasoning module may employ different decision-making models:

  - Rule-Based Systems. Provides “if-then” instructions for Simple Reflex agents.
  - Probabilistic Models. Uses probability calculations over different outcomes in Model-Based Reflex and Goal-Based agents.
  - Machine Learning. Generates predictions or classifications for Goal-Based or Utility-Based agents.
  - Deep-Learning Models. Uses neural networks for complex decision-making.

  The reasoning module also contains these essential sub-modules:

  - Context Management. Maintains the context of user queries throughout the workflow, including user preferences, current task state, and agent history.
  - Planning and Reasoning. Breaks down complex tasks into simpler steps using methods like Chain-of-Thought or tree-based search.
  - Memory Systems. Stores plans and decisions in short-term or long-term memory for persistence, allowing agents to adapt and learn dynamically.

- Action. The agent employs tools to execute the actions needed to implement its strategy. Actions can be physical, digital, visual, or auditory.

  - Tool Integration. Incorporates external tools and APIs, enabling access to external data sources, service calls, and complex calculations.
  - Output Generation. Produces outputs in various forms, including natural language responses, API calls, tool use, status messages, error reports, and numerical results.
  - Safety and Monitoring. Implements input/output validation, logging, monitoring systems, and error-handling mechanisms for production environments.

- Learning. The agent observes the results of its actions and adapts to improve its reasoning. Successful actions reinforce and strengthen its models and decision-making processes.

  - Feedback Mechanisms. Enhances the agent’s reasoning and accuracy through feedback from other AI agents or humans (Human-in-the-Loop, or HITL).
  - Learning Models. Employs various approaches depending on the use case:
    - Supervised Learning. The model learns from externally labeled examples.
    - Self-Supervised Learning. The model learns from labels it derives from the data itself.
    - Unsupervised Learning. The model discovers patterns in unlabeled data.
    - Reinforcement Learning. The model uses iterative trial-and-error feedback to optimize solutions.
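The four modules can be wired into a single loop. The sketch below is a deliberately tiny, hypothetical agent in Python: `perceive` normalizes text, `reason` picks a tool by a keyword rule with learned scores as a tie-breaker, `act` calls the tool and records the step in short-term memory, and `learn` nudges a tool's score toward a reward signal. A real LLM agent would replace the keyword rule with model inference; the class, method names, and update rule are assumptions for illustration only.

```python
from collections import defaultdict
from typing import Callable


class ToyAgent:
    """Minimal perceive -> reason -> act -> learn loop (illustrative only)."""

    def __init__(self, tools: dict[str, Callable[[str], str]]):
        self.tools = tools                                    # Action: available tools
        self.memory: list[tuple[str, str, str]] = []          # short-term memory: (input, tool, output)
        self.scores: dict[str, float] = defaultdict(float)    # learned preference per tool

    def perceive(self, raw: str) -> str:
        # Perception: normalise raw input into a form the reasoner can use.
        return raw.strip().lower()

    def reason(self, observation: str) -> str:
        # Reasoning: rule-based candidates (tools named in the input), falling
        # back to all tools; learned scores decide between candidates.
        candidates = [name for name in self.tools if name in observation] or list(self.tools)
        return max(candidates, key=lambda name: self.scores[name])

    def act(self, tool: str, observation: str) -> str:
        # Action: call the tool and record the step in short-term memory.
        output = self.tools[tool](observation)
        self.memory.append((observation, tool, output))
        return output

    def learn(self, tool: str, reward: float, lr: float = 0.5) -> None:
        # Learning: move the tool's score a step toward the observed reward.
        self.scores[tool] += lr * (reward - self.scores[tool])

    def step(self, raw: str) -> tuple[str, str]:
        observation = self.perceive(raw)
        tool = self.reason(observation)
        return tool, self.act(tool, observation)


agent = ToyAgent({"upper": str.upper, "reverse": lambda s: s[::-1]})
print(agent.step("  Please UPPER this "))   # ('upper', 'PLEASE UPPER THIS')
agent.learn("reverse", 1.0)                 # feedback: 'reverse' was helpful
print(agent.step("hello"))                  # ('reverse', 'olleh')
```

Feedback from a critic, another agent, or a human reviewer enters through `learn`; here a single reward makes `reverse` the default for inputs that match no keyword rule.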

2. AI Verification & Privacy Layer

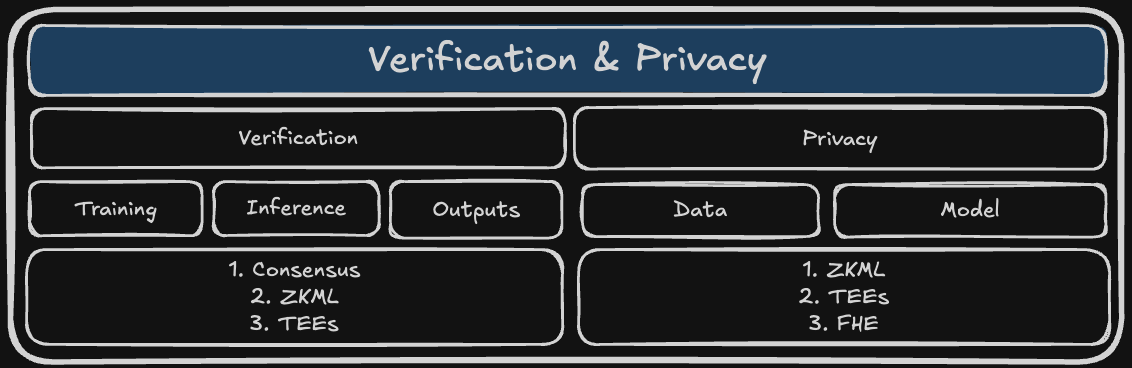

The Verification and Privacy layer is a critical component of the Web3 AI technology stack. It ensures that AI models operate as promised, strengthens trust, and supports adherence to regulatory requirements.

Figure 3. AI verification and privacy layer.

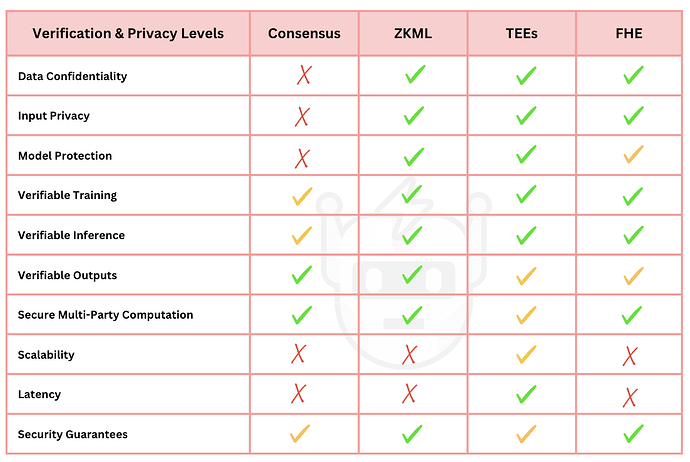

The primary components of verification include:

- Verifiable Training. AI models can employ cryptographic techniques to make their training process verifiable without exposing sensitive data. This verification proves that training followed specified protocols and used the claimed data while preserving privacy guarantees. Verifiable training is valuable in federated learning, where multiple parties collaboratively train a model without sharing raw data, and for demonstrating regulatory compliance in sensitive domains.

  - Fully Homomorphic Encryption (FHE) enables privacy-preserving training in which data owners never expose their raw data. However, its performance limitations make it impractical for large models.
  - Trusted Execution Environments (TEEs) isolate training computation in secure hardware enclaves that can attest that specific code ran on specific data. However, TEEs are exposed to potential side-channel attacks.
  - Zero-Knowledge Machine Learning (ZKML) provides cryptographic proofs that training followed specific protocols without revealing the data or model parameters. ZK proofs still face scalability challenges due to their computational intensity.
  - Consensus mechanisms allow multiple parties to agree on training data and model parameters but cannot, on their own, verify computational correctness.

- Verifiable Inference. Verifiable inference confirms that the original, unmodified model was used and that computations were performed as expected. This provides reproducibility guarantees: different parties would generate identical outputs given the same inputs. For applications deployed on edge devices, where hardware operates outside direct control, verifiable inference helps detect tampering and maintain trust in model execution.

  - FHE provides strong confidentiality guarantees: neither the model owner nor the inference provider can see the input data. However, FHE by itself does not prove that the model computation was complete or correct.
  - TEEs provide strong verification guarantees through hardware-based isolation and attestation of model execution. TEEs require trust in the hardware manufacturer and operator.
  - ZKML creates succinct proofs that inference was performed correctly according to the specified model, without revealing the model parameters or input data.
  - Consensus can verify that multiple parties agree on which model was used but does not verify the inference computation.

- Verifiable Outputs. Output verification ensures that model outputs are auditable, reproducible, and can be validated against established criteria. Many applications require strict AI output verification for accountability and regulatory reasons, including healthcare (where outputs affect patient care), financial services (for fair lending decisions), legal services (for reliable document analysis), autonomous vehicles (for safety-critical decisions), and compliance monitoring systems. Verifiability creates an evidence trail that connects outputs to the underlying model and data.

  - FHE ensures output privacy but does not verify output correctness.
  - TEEs can attest that an output came from a specific model running within the secure enclave. They do not verify the correctness of the model’s logic or outputs.
  - ZKML can prove specific properties of the outputs without revealing the outputs themselves. It also enables verification of compliance with regulatory requirements.
  - Consensus can establish agreement on outputs across multiple parties and create a tamper-proof record of the model outputs. It does not verify the correctness of the outputs.
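Two of the simpler building blocks above can be shown concretely. The Python sketch below assumes a model is identified by the SHA-256 digest of its serialized weights (a hash commitment published in advance) and that "consensus" means a supermajority of nodes reporting the same output digest. It is neither ZKML nor TEE attestation, and the quorum value and function names are hypothetical; the docstring spells out the limitation noted above, that agreement is not correctness.

```python
import hashlib
from collections import Counter
from typing import Optional


def commit(weights: bytes) -> str:
    """Commitment to a model: the SHA-256 digest of its serialized weights."""
    return hashlib.sha256(weights).hexdigest()


def model_matches(weights: bytes, published_commitment: str) -> bool:
    """Check that the weights a provider loaded are the ones committed to."""
    return commit(weights) == published_commitment


def consensus_output(reports: dict[str, str], quorum: float = 2 / 3) -> Optional[str]:
    """Return the output digest reported by at least `quorum` of nodes, else None.

    Agreement only shows that the nodes produced the same result. It does not
    prove the result is correct: every node could run the same faulty model.
    """
    if not reports:
        return None
    digest, votes = Counter(reports.values()).most_common(1)[0]
    return digest if votes / len(reports) >= quorum else None


weights = b"serialized-model-weights"            # placeholder bytes
published = commit(weights)
print(model_matches(weights, published))         # True
print(model_matches(weights + b"!", published))  # False

out = commit(b"model output")
print(consensus_output({"node-a": out, "node-b": out, "node-c": "other"}) == out)  # True
```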

Privacy Components

The key components of privacy include:

- Data Confidentiality. Data is protected from unauthorized access and disclosure and is accessible only to authorized parties.

  - FHE offers the strongest guarantee of data confidentiality, as the data stays encrypted throughout the computation.
  - TEEs provide strong data confidentiality guarantees. They protect data during processing inside a secure enclave and use memory encryption to prevent unauthorized access.
  - ZKML, when run locally, provides strong data confidentiality guarantees, offering computational verification without revealing the input data. However, ZKML’s high computational requirements currently make it impractical for production use.
  - Consensus mechanisms cannot guarantee data confidentiality.

- Input Privacy. Data entered into or provided to a system remains private and is accessed securely.

  - FHE offers the strongest input privacy guarantees, since inputs are never revealed and computations are performed on encrypted data.
  - TEEs offer strong guarantees, as they can attest that inputs have been handled securely and remain encrypted until they are decrypted inside the secure enclave.
  - When run locally, ZKML offers strong guarantees, as users keep their inputs on their own device and share only a proof, never the raw data. ZKML proofs verify properties of the data without disclosing it.
  - Consensus provides limited input privacy, as inputs are visible to network participants unless additional privacy techniques are used.

- Model Privacy. Protection of an AI model’s architecture, weights, parameters, and intellectual property from theft, reverse engineering, or unauthorized access. Model privacy during both training and inference safeguards intellectual property, enhances trust, reduces the risk of malicious exploitation, supports regulatory compliance, and enables model governance and monetization.

  - FHE offers strong model privacy guarantees, since the model stays encrypted during use. However, it is computationally intensive and not yet performant enough for production.
  - TEEs provide strong model privacy guarantees, as the model is secured in the hardware enclave. Remote attestation validates the environment.
  - ZKML provides strong model privacy guarantees, since it uses zero-knowledge proofs to verify the model’s output while keeping the data, the model’s architecture, and its weights confidential.
  - Consensus provides limited model privacy: models are exposed on chain, and encrypting them reduces verifiability.
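FHE schemes are too involved for a short example, but the core idea of computing on ciphertexts can be illustrated with the Paillier cryptosystem. Note the swap: Paillier is only additively homomorphic (a partially homomorphic scheme), not fully homomorphic, and the tiny fixed primes make this sketch completely insecure. It shows a party adding two encrypted values without ever decrypting them.

```python
import math
import random


def keygen(p: int = 293, q: int = 433):
    """Toy Paillier keys from tiny fixed primes (insecure; illustration only)."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    # With generator g = n + 1, decryption simplifies to mu = lam^-1 mod n.
    mu = pow(lam, -1, n)
    return (n, n + 1), (lam, mu, n)


def encrypt(pub, m: int) -> int:
    n, g = pub
    n2 = n * n
    r = random.randrange(1, n)
    while math.gcd(r, n) != 1:          # r must be invertible mod n
        r = random.randrange(1, n)
    return (pow(g, m, n2) * pow(r, n, n2)) % n2


def decrypt(priv, c: int) -> int:
    lam, mu, n = priv
    x = pow(c, lam, n * n)
    return ((x - 1) // n) * mu % n      # L(x) = (x - 1) / n, then multiply by mu


def add_encrypted(pub, c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts (mod n)."""
    n, _ = pub
    return (c1 * c2) % (n * n)


public_key, private_key = keygen()
total = add_encrypted(public_key, encrypt(public_key, 120), encrypt(public_key, 35))
print(decrypt(private_key, total))      # 155
```

Only the private-key holder can recover 155; with realistic key sizes, the party that multiplied the ciphertexts learns nothing about 120 or 35. FHE extends this idea to both addition and multiplication, which is what makes arbitrary model computation possible, at a large performance cost.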

Applications that particularly require privacy protections include those handling healthcare data, financial information, personal data, and federated learning systems.

Figure 4. Verification and privacy tradeoffs for AI. [6]

Web3 Solutions for Trust

Web3 projects offer several solutions that address fundamental trust assumptions:

-

Consensus Mechanisms. Similar to blockchain validation, nodes in a network can provide trustless verification of AI model outputs and agent actions.

-

Zero-Knowledge Machine Learning (ZKML). ZKML enables the trustless verification of AI model properties without revealing model details. It can prove that a model was trained on a specific dataset, that outputs originated from the claimed AI model, and that model outputs remain untampered.

-

Trusted Execution Environments (TEEs). AI models operating within TEEs provide trusted verification via remote attestation while maintaining privacy. TEEs ensure that AI models or agents do not expose data to external parties.

-

Fully Homomorphic Encryption (FHE). FHE allows AI models to process encrypted inputs without accessing raw data. This capability enables AI to operate on sensitive information while adhering to privacy laws such as GDPR, HIPAA, and CCPA.
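Remote attestation, the mechanism TEEs rely on here, boils down to a signed statement that binds a measurement (a hash of the loaded code or model) to a fresh challenge from the verifier. The Python sketch below is a heavily simplified model of that flow: an HMAC with a shared key stands in for the hardware-rooted signature, whereas real TEEs sign with asymmetric keys certified by the vendor, and `HARDWARE_KEY` and all function names are hypothetical.

```python
import hashlib
import hmac

# Stand-in for a secret fused into the TEE hardware (hypothetical). Real TEEs
# sign attestation reports with asymmetric keys chained to a vendor certificate,
# so verifiers never hold the signing key; HMAC keeps this sketch stdlib-only.
HARDWARE_KEY = b"hypothetical-device-key"


def measure(code: bytes) -> str:
    """Measurement: a hash of the code or model loaded into the enclave."""
    return hashlib.sha256(code).hexdigest()


def _sign(measurement: str, nonce: bytes) -> bytes:
    return hmac.new(HARDWARE_KEY, measurement.encode() + nonce, hashlib.sha256).digest()


def make_quote(code: bytes, nonce: bytes) -> tuple[str, bytes]:
    """Enclave side: bind the measurement to the verifier's fresh nonce."""
    m = measure(code)
    return m, _sign(m, nonce)


def verify_quote(quote: tuple[str, bytes], nonce: bytes, expected_measurement: str) -> bool:
    """Verifier side: check the signature, the nonce (freshness), and the expected code."""
    m, tag = quote
    return hmac.compare_digest(tag, _sign(m, nonce)) and m == expected_measurement


agent_code = b"def agent(): ..."
nonce = b"challenge-42"
quote = make_quote(agent_code, nonce)
print(verify_quote(quote, nonce, measure(agent_code)))             # True
print(verify_quote(quote, b"old-challenge", measure(agent_code)))  # False (replayed quote)
```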

Agent Trust Mechanisms

For AI agents specifically, trust can be established through blockchain-specific solutions:

- Smart Contracts. AI agents use smart contracts to execute trades, update information via oracles, and enforce agreements through escrow contracts. Smart contracts are transparent and verifiable, can enforce constraints on agent behavior, and create permanent, auditable records of agent actions [7].

- Tokenized Reputation Systems. AI agents can build verifiable track records of their actions through token systems that create economic incentives, rewarding honest behavior and penalizing misconduct.
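The incentive logic of a tokenized reputation system can be modeled as a small ledger. On chain this would live in a smart contract; the Python below only models the bookkeeping, and the reward amount, the 50% slashing fraction, and the class and method names are hypothetical. It assumes some external verifier (consensus, a TEE attestation, or a human reviewer) has already decided whether each action was honest.

```python
from dataclasses import dataclass, field


@dataclass
class ReputationRegistry:
    """Toy stake-and-slash reputation ledger (hypothetical, not a real protocol)."""
    reward: float = 1.0            # tokens paid per verified honest action
    slash_fraction: float = 0.5    # share of stake burned per verified violation
    stakes: dict[str, float] = field(default_factory=dict)
    reputation: dict[str, int] = field(default_factory=dict)

    def register(self, agent: str, stake: float) -> None:
        if stake <= 0:
            raise ValueError("stake must be positive")
        self.stakes[agent] = stake
        self.reputation[agent] = 0

    def report(self, agent: str, honest: bool) -> None:
        """Record an externally verified outcome for `agent`."""
        if honest:
            self.stakes[agent] += self.reward
            self.reputation[agent] += 1
        else:
            self.stakes[agent] *= 1 - self.slash_fraction
            self.reputation[agent] -= 1


registry = ReputationRegistry()
registry.register("trading-agent", stake=10.0)
registry.report("trading-agent", honest=True)
registry.report("trading-agent", honest=False)
print(registry.stakes["trading-agent"], registry.reputation["trading-agent"])  # 5.5 0
```

Because a violation burns half the stake while honest work earns a fixed reward, misbehavior is costly for an agent with a large stake; tuning those two parameters is where the economic design actually happens.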

Human Verification

Agent verification can also be conducted manually through Human-in-the-Loop (HITL) approaches:

- Human Review Panels. For AI models in sensitive industries such as medicine and law, human experts can provide feedback and evaluate AI agent outputs.

- Crowdsourced Verification. User feedback can help establish an agent’s verifiable track record and improve model outputs over time.

3. Agentic AI Overview

Defining Agentic AI Agents

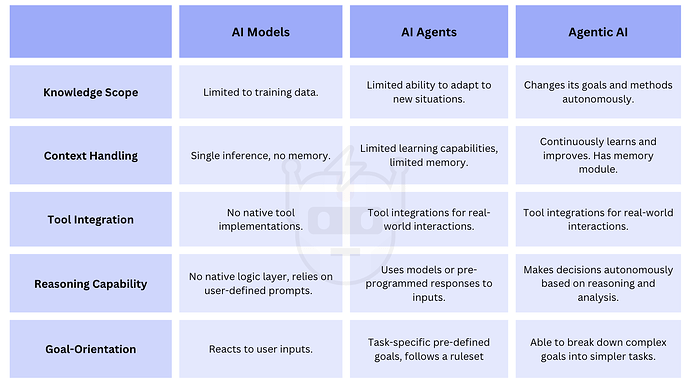

Agentic AI prioritizes autonomy for AI programs, enabling them to make decisions, take actions, and analyze outcomes to adjust future decision-making. Unlike simple AI agents, agentic AI agents utilize memory and reasoning modules to process and adapt to external information without human interaction. They can create subtasks to complete complex assignments and update their actions based on results.

Figure 5. Differences between AI models, AI agents, and Agentic AI [8].

Agentic AI Components

Agentic AI comprises the same four modules as standard AI agents. However, improvements in the following areas are crucial for achieving agent autonomy [9, 10, 11, 12]:

- Memory. Short-term and persistent long-term memory enable recall and improved learning. In multi-agent systems, memory allows agents to share and collectively update a knowledge base, enhancing collaboration and efficiency.

- Reasoning Framework. Reasoning frameworks provide AI agents with structured thinking processes for solving complex tasks. The most commonly used reasoning frameworks include:

  - Chain-of-Thought (CoT). Enables AI models to generate intermediate reasoning steps before providing a final answer. CoT helps models structure their thinking and avoid simple errors during problem-solving.
  - Reasoning + Acting (ReAct). Combines reasoning with action-taking in an iterative process. The agent follows a workflow of reason → act → observe → reason → act. ReAct is particularly useful for tasks requiring information gathering or complex multi-step procedures.
  - Tree-of-Thoughts (ToT). Extends Chain-of-Thought reasoning by exploring multiple reasoning paths in parallel. This allows AI agents to consider several alternatives before committing to a solution, making it useful for problems with multiple potential approaches.
  - Zero-Shot Chain-of-Thought (Zero-Shot-CoT). Elicits Chain-of-Thought reasoning without worked examples, typically by appending a simple prompt such as “Let’s think step by step.”
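The ReAct loop is easy to see in code. In the Python sketch below, `policy` is a hypothetical stand-in for an LLM call: it reads the transcript so far and returns a thought, an action, and an action input. A scripted policy and a single dictionary-backed `lookup` tool replace the model and real tools so the example runs offline; the transcript format loosely follows the Thought/Action/Observation style associated with ReAct, but its exact shape is an assumption here.

```python
from typing import Callable

# (thought, action, action_input); action "finish" means action_input is the answer.
Step = tuple[str, str, str]


def react(question: str,
          policy: Callable[[list[str]], Step],
          tools: dict[str, Callable[[str], str]],
          max_steps: int = 5) -> str:
    """Run a reason -> act -> observe loop until the policy finishes or the budget runs out."""
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        thought, action, action_input = policy(transcript)    # Reason
        transcript.append(f"Thought: {thought}")
        if action == "finish":
            return action_input
        observation = tools[action](action_input)             # Act
        transcript.append(f"Action: {action}[{action_input}]")
        transcript.append(f"Observation: {observation}")      # Observe, then reason again
    return "no answer within step budget"


# Offline stand-ins for an LLM and a search tool (hypothetical).
TOOLS = {"lookup": lambda q: {"capital of France": "Paris"}.get(q, "unknown")}


def scripted_policy(transcript: list[str]) -> Step:
    observations = [line for line in transcript if line.startswith("Observation: ")]
    if not observations:
        return ("I need to look this up.", "lookup", "capital of France")
    return ("The observation answers the question.", "finish",
            observations[-1].removeprefix("Observation: "))


print(react("What is the capital of France?", scripted_policy, TOOLS))  # Paris
```

Swapping `scripted_policy` for a function that prompts an LLM with the transcript turns this into a working, if minimal, agent; the `max_steps` budget stops an agent that never decides to finish from looping forever.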

Benefits of Agentic AI

Agentic AI offers several advantages over standard AI agents:

- Increased Efficiency. Agentic AI enhances AI-automated workflows by embedding decision-making and problem-solving capabilities. Rather than relying on human input, agentic AI can respond autonomously to changing environments, significantly improving workflow efficiency.

- Improved Employee Performance. By processing real-time data and understanding problems comprehensively, agentic AI can assist employees by completing subtasks and adjusting plans based on changing circumstances.

- Accelerated Workflow Design. Agentic AI can generate workflow designs based on user intentions, industry best practices, templates, and learnings from previous designs.

- Customized User Engagement. Rather than responding via generalized workflows, agentic AI can address user problems with customized solutions based on an analysis of user data, current situations, and available solution options.

Agentic AI Applications

Agentic AI can operate workflows across various domains and ecosystems:

- Virtual Assistants. Agentic AI can replicate and enhance workflows to increase user productivity:

  - Task managers. Agents can manage schedules, automate workflows, and create new tools using no-code platforms.
  - Research assistants. Agents can autonomously conduct research, analyze and synthesize data, and generate comprehensive reports.
  - Content creation. Agents can produce, edit, and distribute content across social media platforms based on user intentions.

- Healthcare. Agentic AI assists medical professionals through:

  - Enhanced diagnostics and treatment. AI agents analyze patient data, diagnostic images, and genetic information to help medical professionals make accurate diagnoses.
  - Improved patient experience. Agents continuously monitor patients, improving care quality, enabling early problem detection, and enhancing adherence to treatment plans.
  - Efficient healthcare operations. Agents streamline healthcare workflows by automating patient scheduling, managing electronic health records, and processing billing information.

- Financial Services. AI agents can monitor portfolios continuously, create personalized investment plans, and execute transactions:

  - Portfolio management. Agents monitor market conditions, rebalance portfolios, and execute trades with minimal human interaction.
  - Risk managers. Specialized agents continuously monitor on-chain and market activity, assess risk factors, and execute risk-reduction strategies.
  - Personalized financial advisors. Agents analyze users’ financial histories, risk tolerances, and goals to create customized investment plans and execute strategies across traditional and crypto markets.

- Robotics. AI agents enhance robot capabilities in several ways:

  - Autonomous navigation. AI agents help robots navigate complex environments by making real-time decisions.
  - Task automation. AI agents automate repetitive tasks and adapt to changes in production processes, optimizing workflows and efficiency.
  - Human-robot interaction. Agents facilitate communication and collaboration between humans and robots by understanding natural language commands and human intentions.
  - Data analysis. AI agents analyze vast amounts of data and make informed decisions, assisting robots in specialized applications such as drug manufacturing and complex surgical procedures.

4. What’s Next For AI Agents

Looking ahead, several key developments will shape how AI agents evolve within this emerging infrastructure landscape. These advancements will determine not only what AI agents can do but how they’ll integrate with existing systems, collaborate, and earn user trust through verifiable security guarantees.

Currently, AI agents face several problems that undermine user trust.

-

Insecure Account Key Management. To access traditional applications like X or Instagram, AI agents require access to user API keys, often stored as environmental variables, or the use of an X auth_token cookie. This exposes the user’s account to potential exploits and could lead to the user’s account being banned.

- OAuth 3.0 aims to solve this issue by providing improved account security guarantees via customized account delegation.

-

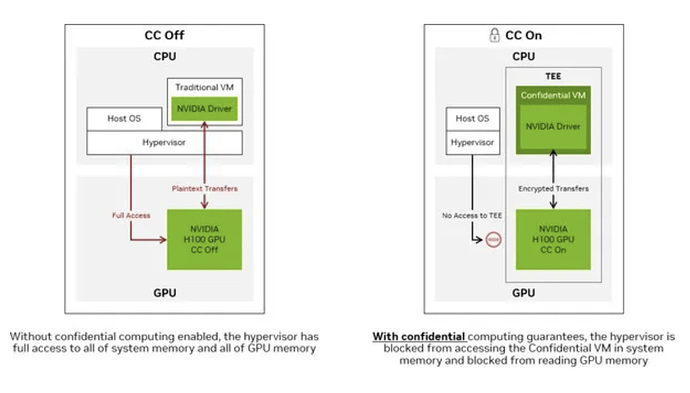

Limited Large-Scale Confidential AI Training. As mentioned previously, the verification and privacy layer is an important piece of the decentralized AI tech stack, providing a fundamental trust assumption for AI models and agents. CPU TEEs are limited to smaller LLMs and are insufficient for training large models.

- While still a new technology, GPU TEEs are a scalable solution for confidential, high-performance training for large AI models.

-

Lack of Interoperability. As AI systems proliferate and grow more specialized, interoperability between different AI systems, models, agents, and tools becomes more crucial. This challenge manifests as incompatible data formats, inconsistent communication protocols, and varying semantic interpretations across AI systems.

- Anthropic’s Model Context Protocol (MCP) represents a significant step toward solving these interoperability challenges by providing a standardized framework for AI models to interact with external tools and data sources.

1. OAuth 3.0

OAuth 3.0 is a proposed extension of the OAuth authorization framework that addresses the limitations of the current OAuth 2.0 system. Specifically, OAuth 3.0 aims to address complex delegation scenarios for applications requiring enhanced security guarantees. OAuth 3.0 allows users to create limited-scope tokens for safer delegation [13].

How OAuth 3.0 Works

OAuth 3.0 employs a proxy-based architecture that leverages trusted execution environments (TEEs) as a confidential compute proxy. The TEE manages all authentication and authorization processes between users, client applications (including AI agents), and resource servers—services with protected data and functionality.

While OAuth 2.0 uses tokens that are exposed to client applications, OAuth 3.0 keeps credentials and tokens secure within the TEE. This allows applications to act on behalf of users without directly handling sensitive credentials. Throughout the process, credentials never leave the TEE proxy, significantly reducing the risk of credential misuse or theft.

OAuth 3.0 Workflow

The authorization flow for OAuth 3.0 follows these steps:

- The user grants an application access to their data on a particular service.

- The user authenticates directly with the proxy using a secure method, such as a passkey.

- The user specifies the permission policies they want to grant, including time limits, usage limits, and account encumbrance.

- The proxy securely stores these permissions and obtains the necessary tokens from the resource server.

- When the application needs access to the service, it sends a request to the proxy.

- The proxy validates the application’s request against the user’s permission policies.

- If approved, the proxy executes the request using the stored credentials and returns only the authorized results.
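The proxy's validation step can be sketched as follows. OAuth 3.0 is a proposal without a standard API, so everything here is hypothetical: the `Policy` fields, the `DelegationProxy` class, and the `call_service` callback are illustrative names, and a plain Python object stands in for the TEE. The point is the shape of the check: the application never receives the stored token, and each request must pass the allowed-action, time, usage, and conditional checks before the proxy acts.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Policy:
    """User-defined delegation policy; all field names are hypothetical."""
    allowed_actions: set[str]
    expires_at: float                                     # time-bound (Unix seconds)
    max_uses: int                                         # use-bound
    condition: Optional[Callable[[dict], bool]] = None    # conditional if-then rule


class DelegationProxy:
    """Plays the role of the TEE proxy: the service token never leaves this object."""

    def __init__(self, service_token: str, call_service: Callable[[str, str, dict], dict]):
        self._token = service_token
        self._call_service = call_service
        self._policies: dict[str, Policy] = {}
        self._uses: dict[str, int] = {}

    def grant(self, app_id: str, policy: Policy) -> None:
        """Store the user's permission policy for one application."""
        self._policies[app_id] = policy
        self._uses[app_id] = 0

    def request(self, app_id: str, action: str, params: dict,
                now: Optional[float] = None) -> dict:
        """Validate an application's request against its policy, then execute it."""
        now = time.time() if now is None else now
        policy = self._policies.get(app_id)
        if policy is None:
            raise PermissionError("no delegation for this application")
        if action not in policy.allowed_actions:
            raise PermissionError(f"action {action!r} not permitted")
        if now >= policy.expires_at:
            raise PermissionError("delegation expired")
        if self._uses[app_id] >= policy.max_uses:
            raise PermissionError("usage limit reached")
        if policy.condition is not None and not policy.condition(params):
            raise PermissionError("policy condition not met")
        self._uses[app_id] += 1
        # Execute with the stored credential; only the result goes back to the app.
        return self._call_service(self._token, action, params)


def fake_service(token: str, action: str, params: dict) -> dict:
    # Stand-in resource server: checks the token, never echoes it.
    return {"ok": token == "secret-token", "action": action}


proxy = DelegationProxy("secret-token", fake_service)
proxy.grant("agent-1", Policy({"post"}, expires_at=time.time() + 3600, max_uses=1,
                              condition=lambda p: len(p.get("text", "")) <= 280))
print(proxy.request("agent-1", "post", {"text": "gm"}))   # {'ok': True, 'action': 'post'}
```

In a real deployment the isolation comes from the TEE, not from Python's underscore convention; the sketch only mirrors the order of checks in the workflow above.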

Key Innovations

OAuth 3.0 features several innovations using TEEs that bridge Web2 and Web3:

- Advanced Delegation Models. OAuth 3.0 supports sophisticated delegation policies, including:

  - Time-bound. Specifies the period during which delegation is available.
  - Use-bound. Limits delegation to a specific number of uses.
  - Conditional. Implements if-then rules that dictate delegation availability.
  - Hierarchical. Enables a primary agent to delegate specific subtasks to specialist agents.

- Account Encumbrance. Users can create “credible commitments” by giving the delegate controlled access to their account. Account encumbrance provides several safety guarantees:

  - Ensures AI agents comply with regulatory requirements.
  - Guarantees human confirmation for specific sensitive actions.
  - Allows service providers to enforce operational constraints and limitations.

- Zero-Knowledge TLS (ZKTLS). Provides cryptographic proofs that validate the proxy’s behavior without exposing sensitive data.

- Expressive Policy Language. Enables users to give the proxy detailed specifications of which actions are permitted under which circumstances.
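Hierarchical delegation raises the question of what a primary agent may pass on. A natural rule, assumed here rather than taken from any OAuth 3.0 specification, is that a sub-grant can only narrow its parent: a subset of its scopes, no later expiry, and no larger use budget. The Python sketch below encodes that rule; `Grant` and its fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    """A delegation grant (hypothetical structure)."""
    holder: str
    scopes: frozenset[str]
    expires_at: float      # Unix seconds
    max_uses: int

    def delegate(self, to: str, scopes: set[str], expires_at: float, max_uses: int) -> "Grant":
        """Issue a sub-grant that can only narrow this grant's rights."""
        extra = set(scopes) - self.scopes
        if extra:
            raise PermissionError(f"scopes not held by {self.holder}: {sorted(extra)}")
        return Grant(
            holder=to,
            scopes=frozenset(scopes),
            expires_at=min(expires_at, self.expires_at),   # never outlive the parent
            max_uses=min(max_uses, self.max_uses),         # never exceed the parent's budget
        )


primary = Grant("primary-agent", frozenset({"read_dm", "post", "like"}), expires_at=1_000.0, max_uses=10)
poster = primary.delegate("posting-agent", {"post"}, expires_at=5_000.0, max_uses=50)
print(sorted(poster.scopes), poster.expires_at, poster.max_uses)  # ['post'] 1000.0 10
```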

2. TEE GPUs

Nvidia’s H100 is a TEE-enabled GPU designed to provide confidential computing security for high-performance AI applications.

Figure 6 (a, b). Nvidia H100 architecture for Confidential Computing [14].

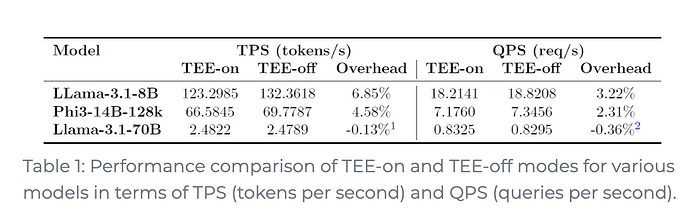

Nvidia H100 GPU Performance

Phala Network tested the performance of Nvidia’s H100 (TEE GPU) against AMD’s SEV-SNP (TEE CPU) for inference on three LLMs: Meta-Llama-3.1-8B-Instruct, Microsoft-Phi-3-14B-128k-Instruct, and Meta-Llama-3.1-70B-Instruct [15]. Their results showed that for LLM inference, TEE GPUs add minimal overhead compared with running without TEE protection: just 6.85% in tokens per second (TPS) and 3.22% in queries per second (QPS).
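As a reading aid, relative overhead is taken here, as an assumption about how such benchmarks are usually reported, to be the share of throughput lost relative to the same workload without TEE protection:

$$
\text{overhead}_{\text{TPS}} = \frac{\text{TPS}_{\text{no TEE}} - \text{TPS}_{\text{TEE}}}{\text{TPS}_{\text{no TEE}}} \times 100\%
$$

Under that definition, a 6.85% overhead means a hypothetical workload reaching 100 tokens per second without TEE protection would reach about 93.15 tokens per second inside the TEE; the same formula applies to QPS.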

Figure 7. Phala TEE GPU vs TEE CPU performance results [15].

The main conclusions from their experiment were:

- TEE GPUs scale effectively for large LLM inference tasks as input sizes and model complexity grow. For large models, the overhead is negligible.

- Specifically, the overhead decreases as each of the following increases:

  - Model size
  - Input size
  - Total tokens (input + output size)

Considerations for AI Applications

Figure 8. Pros and cons of GPU vs CPU TEEs.

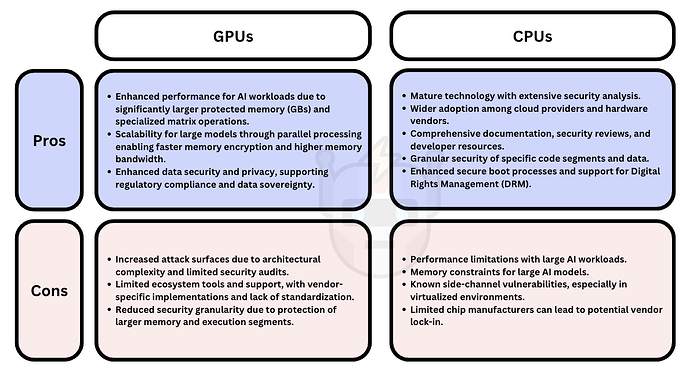

When deciding between CPU and GPU TEEs for AI applications, organizations should consider:

- Inference-only systems. For small models where latency isn’t critical, CPU TEEs are sufficient. Larger models or high-throughput applications should use GPU TEEs for better performance.

- Training systems. GPU TEEs are better suited to the higher computational demands of training large models or models that use large sensitive datasets.

- Multi-tenant environments. CPU TEEs offer stronger isolation between tenants, while GPU TEEs provide better resource utilization.

- Regulated industries requiring certification. CPU TEEs are currently more mature and better certified than the newer GPU TEEs.

3. Interoperability Standards

AI interoperability challenges exist at several distinct levels.

- Technical Interoperability. AI systems use different data formats, communication protocols, and inference paradigms. For example, an AI agent that uses Anthropic’s Claude may struggle to collaborate effectively with another agent using OpenAI’s GPT-4. These technical incompatibilities create AI system silos and significantly increase development overhead for cross-platform integration.

- Model Semantic Interoperability. Different language models often have different semantic understandings of concepts, resulting in:

  - Varied interpretations of identical instructions or queries.
  - Loss of critical context when information is transferred between models.
  - Inconsistent reasoning patterns based on different internal knowledge graphs and representations.

  These semantic discrepancies frequently cause models to misinterpret data, especially in specialized domains or with ambiguous terms or complex relationships, resulting in unreliable outputs.

- AI Agent Interoperability. For effective collaboration, AI agents require:

  - Aligned goals and compatible planning methodologies.
  - Clear awareness of other agents’ capabilities and limitations.
  - Standardized communication protocols for sharing context and state.

  Without these elements, agent teams cannot coordinate effectively, leading to redundant work, conflicting actions, and an inability to solve complex problems that require specialized expertise from multiple agents.

The consequences of insufficient interoperability include vendor lock-in, resource-intensive custom integration development, and severely limited collaboration potential between AI systems.

Current Solutions

-

Standardized APIs and Interface Layers. Anthropic released its Model Context Protocol (MCP) as a framework for connecting AI models to external tools and data sources. MCP has gained significant adoption across several platforms and services, including integration with:

-

Microsoft Azure AI services [16].

-

Amazon AWS (through Bedrock).

-

OpenAI ChatGPT and Agent SDK [17].

-

Various developer frameworks including LangChain.

Significant implementations have also appeared in specialized applications including Stripe and Block (fintech), Perplexity (search), and Cursor (code editor).

-

-

Unified Communication Protocols. OPAL (Open Protocol for Agent Language) represents an emerging standard for agent-to-agent communication. It provides structured formats for capability declaration, task representation, and inter-agent assistance requests.

In the open-source LLM space, the Hugging Face Transformers library has established de facto standards for model architecture, weights, and tokenization, enabling greater cross-compatibility among open models.

-

Data Exchange Formats. GGUF (GPT-Generated Unified Format) has emerged as the leading standard for packaging and distributing open-source language models. By allowing different inference engines to work with the same model files, GGUF addresses a critical interoperability challenge at the deployment level.

Additionally, structured JSON-based solutions are being developed to standardize prompt formats, user inputs, and response structures across different LLMs, creating more consistency in model interactions.
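To make the deployment-level point concrete, the sketch below reads the fixed header at the start of a GGUF file, which is what lets any compatible inference engine recognize the format before it parses metadata and tensors. It assumes a little-endian file of GGUF version 2 or later (the ASCII magic `GGUF`, a 32-bit version, then 64-bit tensor and metadata counts); `GGUFHeader` and `read_gguf_header` are illustrative names, not part of any GGUF library.

```python
import io
import struct
from typing import BinaryIO, NamedTuple


class GGUFHeader(NamedTuple):
    version: int
    tensor_count: int
    metadata_kv_count: int


def read_gguf_header(f: BinaryIO) -> GGUFHeader:
    """Parse the fixed-size GGUF header (assumes little-endian, version >= 2)."""
    if f.read(4) != b"GGUF":
        raise ValueError("not a GGUF file: bad magic bytes")
    (version,) = struct.unpack("<I", f.read(4))
    if version < 2:
        # Version 1 stored the counts as 32-bit integers; this sketch skips it.
        raise ValueError(f"unsupported GGUF version {version}")
    tensor_count, metadata_kv_count = struct.unpack("<QQ", f.read(16))
    return GGUFHeader(version, tensor_count, metadata_kv_count)


if __name__ == "__main__":
    # Synthetic header with made-up counts, standing in for a real model file.
    fake = io.BytesIO(b"GGUF" + struct.pack("<IQQ", 3, 2, 5))
    print(read_gguf_header(fake))
```

With a real model you would pass `open(path, "rb")`; the metadata key/value pairs and tensor descriptors follow immediately after this header.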

Model Context Protocol Overview

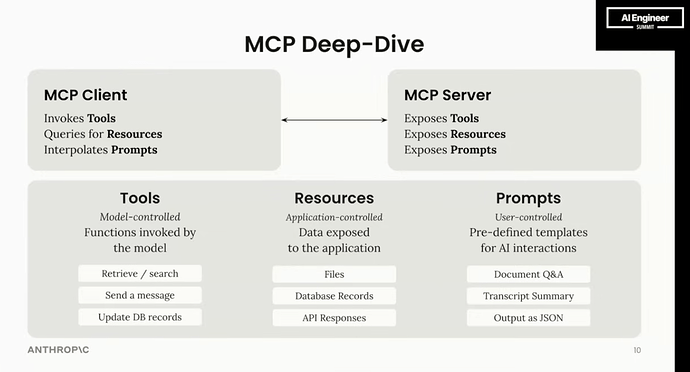

Figure 9. Anthropic MCP overview [18].

The Model Context Protocol (MCP) addresses core AI interoperability challenges through a structured framework that defines:

-

How context is provided to the model

-

How tools and capabilities are described to the model

-

How the model should interpret and use contextual information

-

How models should format responses when working with external systems
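As an illustration of how tools are described and how calls are formatted, the sketch below shows a tool definition a server might advertise and a helper that serializes the model's chosen call as a JSON-RPC 2.0 request. The `name`/`description`/`inputSchema` fields and the `tools/call` method follow our reading of the MCP specification; the `get_forecast` tool and both helper functions are hypothetical.

```python
import json
from typing import Any, Dict, List

# Hypothetical tool definition a server might advertise via tools/list.
# "description" carries the semantic guidance; "inputSchema" is JSON Schema.
WEATHER_TOOL: Dict[str, Any] = {
    "name": "get_forecast",
    "description": (
        "Return the weather forecast for a city. "
        "Use when the user asks about upcoming weather."
    ),
    "inputSchema": {
        "type": "object",
        "properties": {
            "city": {"type": "string", "description": "City name, e.g. 'Berlin'"},
            "days": {"type": "integer", "description": "Forecast length in days"},
        },
        "required": ["city"],
    },
}


def missing_arguments(tool: Dict[str, Any], arguments: Dict[str, Any]) -> List[str]:
    """Return required parameters absent from the model-proposed arguments."""
    return [p for p in tool["inputSchema"].get("required", []) if p not in arguments]


def make_call_request(request_id: int, tool: Dict[str, Any], arguments: Dict[str, Any]) -> str:
    """Serialize a JSON-RPC 2.0 tools/call request after a basic argument check."""
    missing = missing_arguments(tool, arguments)
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool["name"], "arguments": arguments},
    })


if __name__ == "__main__":
    print(make_call_request(1, WEATHER_TOOL, {"city": "Berlin", "days": 3}))
```

The required-field check is deliberately shallow; a production client would validate arguments with a full JSON Schema validator, which works precisely because `inputSchema` is plain JSON Schema rather than a model-specific format.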

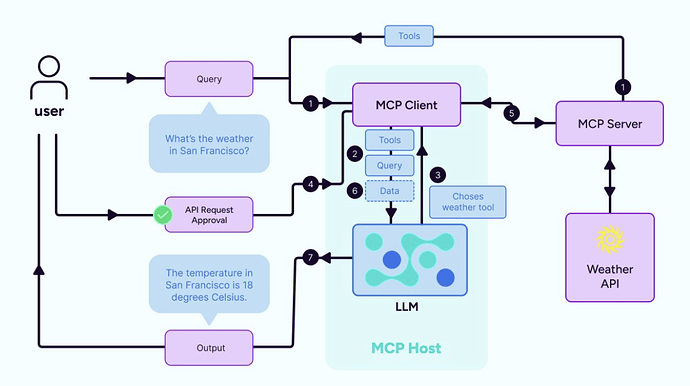

Architecture

MCP employs a modular framework that separates the AI application (client) from the data sources or tools (servers). This separation enables flexibility, scalability, and security while maintaining consistent communication standards.

Figure 10. Anthropic MCP Architecture.

The architecture consists of three main layers:

-

MCP Hosts (Clients). Clients are AI-powered applications that use MCP to access external data or perform actions. Key characteristics include:

-

Connect to multiple MCP Servers simultaneously.

-

Approve and manage which servers they interact with, ensuring controlled access.

-

Typically embed an LLM that utilizes data or tools provided by servers.

-

Process responses and integrate retrieved information into their operations.

-

MCP Servers. Servers are lightweight adapters between raw data or tools and the AI client. Each server implements the MCP standard to provide:

-

Resources. Structured data (files, database records, web content).

-

Tools. Executable functions (API calls, file operations) that the model can invoke.

-

Prompts. Templates or instructions for server interaction.

Servers communicate their capabilities to the MCP Host, handle requests, and return responses in standardized formats. The current version runs locally, though remote capabilities are in development, with security controls to limit data exposure.

-

Transport and Communication Layer. This layer defines how the MCP Hosts and Servers exchange messages. MCP uses JSON-RPC 2.0 for messaging, supporting:

-

Stdio (Standard Input/Output). Used for local communication between a client and server on the same machine.

-

HTTP with Server-Sent Events (SSE). Designed for remote communication, allowing servers to push updates to clients over a persistent connection.

Security features include:

-

Servers maintain control of their resources.

-

Clients must explicitly approve connections.

-

No API keys are shared with LLM providers, enhancing privacy.
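The server, transport, and approval pieces above can be tied together in a small sketch: a server that answers `tools/list` and `tools/call` with one JSON-RPC 2.0 message per line (the framing MCP's stdio transport uses), and a host that refuses to talk to servers the user has not approved. It is hypothetical and deliberately minimal: it omits the `initialize` handshake, resources, prompts, and notifications that a real MCP server (normally built with an official SDK) must handle, and the `add` tool and `Host` class are illustrative names. A direct function call stands in for writing to a server subprocess's stdin and reading a line from its stdout.

```python
import json
from typing import Any, Callable, Dict, Optional, Set


def add(a: float, b: float) -> float:
    """Hypothetical example tool."""
    return a + b


# Hypothetical tool metadata returned by tools/list, and the callables behind it.
TOOL_DEFS = [{
    "name": "add",
    "description": "Add two numbers.",
    "inputSchema": {
        "type": "object",
        "properties": {"a": {"type": "number"}, "b": {"type": "number"}},
        "required": ["a", "b"],
    },
}]
TOOL_FUNCS: Dict[str, Callable[..., Any]] = {"add": add}


def _reply(req_id: Any, result: Any = None, error: Optional[Dict[str, Any]] = None) -> str:
    """Build one JSON-RPC 2.0 response; json.dumps never emits raw newlines here."""
    msg: Dict[str, Any] = {"jsonrpc": "2.0", "id": req_id}
    if error is not None:
        msg["error"] = error
    else:
        msg["result"] = result
    return json.dumps(msg)


def handle_line(line: str) -> str:
    """Server side: answer one newline-delimited JSON-RPC request."""
    req = json.loads(line)
    req_id, method = req.get("id"), req.get("method")
    params = req.get("params") or {}
    if method == "tools/list":
        return _reply(req_id, result={"tools": TOOL_DEFS})
    if method == "tools/call":
        name = params.get("name")
        func = TOOL_FUNCS.get(name)
        if func is None:
            return _reply(req_id, error={"code": -32602, "message": f"Unknown tool: {name}"})
        try:
            value = func(**params.get("arguments", {}))
        except Exception as exc:
            # Tool failures are reported inside the result with isError set.
            return _reply(req_id, result={"content": [{"type": "text", "text": str(exc)}], "isError": True})
        return _reply(req_id, result={"content": [{"type": "text", "text": str(value)}], "isError": False})
    return _reply(req_id, error={"code": -32601, "message": "Method not found"})


class Host:
    """Hypothetical host that only talks to servers the user has approved."""

    def __init__(self, approved: Set[str]) -> None:
        self.approved = approved
        self.servers: Dict[str, Callable[[str], str]] = {}

    def connect(self, name: str, send_line: Callable[[str], str]) -> None:
        if name not in self.approved:
            raise PermissionError(f"server {name!r} has not been approved")
        self.servers[name] = send_line

    def request(self, server: str, req_id: int, method: str,
                params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
        line = json.dumps({"jsonrpc": "2.0", "id": req_id, "method": method, "params": params or {}})
        return json.loads(self.servers[server](line))
```

For example, after `host = Host({"calc"})` and `host.connect("calc", handle_line)`, calling `host.request("calc", 1, "tools/call", {"name": "add", "arguments": {"a": 2, "b": 3}})` returns a result whose text content is `"5"`, while `host.connect("other", handle_line)` raises `PermissionError`.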

Interoperability Solutions

MCP does not address a single interoperability problem in isolation; it provides a framework that spans technical, semantic, and agent-collaboration challenges.

1. Technical Interoperability. MCP addresses technical interoperability by creating standardized interfaces and communication patterns that allow different systems to connect regardless of their underlying implementation details.

-

Standardized Communication. Creates consistent formats for describing tools and handling inputs/outputs between AI models and external systems.

-

API Integration. Provides clear guidelines for presenting API specifications to models and standardizes how models interpret API documentation.

-

Data Format Handling. Offers guidance on describing various data structures to models and standardizes data formatting for external systems.

-

Bidirectional Data Flow. Enables models to both query and update external systems, reducing integration complexity.
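One way to see the value of a consistent tool format is to translate a single MCP-style tool definition into the tool formats that two model APIs accept, which is roughly what a host does before handing tools to its LLM. The target shapes below reflect our reading of OpenAI's Chat Completions function tools and Anthropic's Messages API tools; treat them as assumptions to check against each provider's current documentation. The `search_docs` tool and the converter names are hypothetical.

```python
from typing import Any, Dict

Tool = Dict[str, Any]

# Hypothetical tool in MCP's shape: name, description, JSON Schema input.
SEARCH_TOOL: Tool = {
    "name": "search_docs",
    "description": "Search internal documentation and return matching passages.",
    "inputSchema": {
        "type": "object",
        "properties": {"query": {"type": "string"}},
        "required": ["query"],
    },
}


def to_openai_tool(tool: Tool) -> Tool:
    """Wrap the tool as an OpenAI Chat Completions function tool (assumed shape)."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            "parameters": tool["inputSchema"],
        },
    }


def to_anthropic_tool(tool: Tool) -> Tool:
    """Rename fields for Anthropic's Messages API tool format (assumed shape)."""
    return {
        "name": tool["name"],
        "description": tool.get("description", ""),
        "input_schema": tool["inputSchema"],
    }
```

Because both targets reuse the same JSON Schema object, the server describes the tool once and each host performs only a shallow, mechanical translation; that is the practical sense in which a shared format reduces per-vendor integration work.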

2. Semantic Interoperability. MCP helps AI models understand the purpose and appropriate use of tools, not just their technical specifications:

-

Conceptual Alignment. Provides guidelines on what each tool does, when to use it, why it is appropriate, and how to interpret the results.

-

Knowledge Representation. Standardizes how tool capabilities are represented to models, creating frameworks to translate between different semantic contexts.

-

Context Preservation. Emphasizes maintaining relevant context throughout tool interactions, ensuring important information is not lost between operations.

3. AI Agent Interoperability. MCP creates an operational layer for multi-agent workflows:

-

Agent-to-Tool Connectivity. Establishes standard patterns for agents to interact with external tools and data sources.

-

Shared Context. Allows multiple agents to access the same real-time context, aligning their actions and reducing conflicts.

-

Adaptable Framework. The framework supports different AI models through consistent patterns, reducing vendor lock-in and enabling collaboration and agent specialization across ecosystems.

-

Actionable Communication. Enables bidirectional communication where agents can trigger actions or delegate tasks, enhancing coordination.
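The shared-context and actionable-communication points can be illustrated with a toy example: agents coordinate through one server that exposes a task board as a readable resource plus a `claim_task` tool, so a later agent sees an earlier agent's claim and does not duplicate its work. Everything here is hypothetical (the class, the `board://tasks` URI, and the tool); direct method calls stand in for the JSON-RPC `resources/read` and `tools/call` round trips, and the result shapes are only modeled on MCP's.

```python
import json
from typing import Any, Dict, List


class TaskBoardServer:
    """Hypothetical MCP-style server exposing shared state as a resource and a tool."""

    URI = "board://tasks"  # hypothetical resource URI

    def __init__(self, tasks: List[str]) -> None:
        self.owners: Dict[str, str] = {task: "" for task in tasks}

    def read_resource(self, uri: str) -> Dict[str, Any]:
        """Return the board in a shape modeled on an MCP resources/read result."""
        if uri != self.URI:
            raise ValueError(f"unknown resource: {uri}")
        return {"contents": [{"uri": uri, "mimeType": "application/json",
                              "text": json.dumps(self.owners)}]}

    def call_tool(self, name: str, arguments: Dict[str, str]) -> Dict[str, Any]:
        """Handle the hypothetical claim_task tool; refuse tasks that are already owned."""
        if name != "claim_task":
            raise ValueError(f"unknown tool: {name}")
        task, agent = arguments["task"], arguments["agent"]
        if self.owners.get(task):
            text, failed = f"{task} already claimed by {self.owners[task]}", True
        else:
            self.owners[task] = agent
            text, failed = f"{agent} claimed {task}", False
        return {"content": [{"type": "text", "text": text}], "isError": failed}


def pick_task(server: TaskBoardServer, agent: str) -> str:
    """Agent side: read the shared board, then claim the first unowned task."""
    contents = server.read_resource(TaskBoardServer.URI)["contents"]
    board = json.loads(contents[0]["text"])
    for task, owner in board.items():
        if not owner:
            result = server.call_tool("claim_task", {"task": task, "agent": agent})
            if not result["isError"]:
                return task
    return ""  # nothing left to claim
```

The server-side ownership check in `claim_task` is what prevents conflicting actions; a real server handling concurrent agents would also need locking or a transactional store, which this sequential sketch sidesteps.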

By abstracting these different aspects of interoperability, MCP helps reduce fragmentation in AI systems development and creates more consistent experiences across tools and platforms.

5. Conclusion

The evolution of AI agents from simple rule-based systems to autonomous agentic entities represents a significant technological shift that demands equally sophisticated security and privacy infrastructure. Three key developments are shaping this evolution: enhanced authorization frameworks like OAuth 3.0, high-performance secure computing through TEE-enabled GPUs, and comprehensive interoperability solutions such as Anthropic’s Model Context Protocol (MCP).

Authorization frameworks like OAuth 3.0 address the complex security requirements unique to AI systems, particularly concerning delegation, credential management, and fine-grained permissions. Simultaneously, advancements in TEE technologies — especially GPU-based implementations — are creating secure computing environments that maintain high performance, addressing the computational demands of sophisticated AI models.

The Model Context Protocol represents a crucial third pillar in this infrastructure, solving the fundamental challenge of interoperability across AI systems. By providing standardized interfaces for AI models to interact with external tools and data sources, MCP creates a common language that enables different AI systems to work together seamlessly. This standardization is essential for the development of specialized agent ecosystems where multiple AI agents with different capabilities can collaborate effectively. MCP’s comprehensive approach—addressing technical formats, semantic understanding, and agent coordination—provides the foundation for truly collaborative multi-agent systems that can tackle complex problems requiring diverse AI capabilities.

This convergence of enhanced authorization frameworks and high-performance secure computing creates a foundational infrastructure where AI agents can securely operate with delegated authority while maintaining strong privacy guarantees. The security-performance balance achieved through TEE-enabled GPUs is particularly crucial, as the minimal performance overhead (less than 7% for token processing) makes secure AI deployment economically viable at scale.

As AI agents transition from controlled, limited-scope applications to more autonomous agentic systems operating across multiple domains, these security mechanisms will prove essential. Future agentic AI systems will need to navigate complex environments, handle increasingly sensitive data, and operate with greater autonomy — all while maintaining user trust and regulatory compliance. The technologies discussed in this report provide the technical foundation for this transition, enabling AI agents to operate securely across application boundaries while respecting privacy constraints and delegation limitations.

The verification and privacy layer elements explored earlier — including verifiable training, inference, and outputs — complement these authorization frameworks, secure execution environments, and interoperability standards to create a comprehensive trust architecture for next-generation AI systems. Together, they enable the development of AI agents that can earn user trust through verifiable security guarantees while delivering the performance necessary for complex reasoning, autonomous operation, and effective collaboration across AI ecosystems.

In our next article, we will take a deep dive into the agentic application technology stack, examining how these security, privacy, and interoperability fundamentals integrate with the components that enable sophisticated AI reasoning, multi-agent collaboration, and seamless integration with existing systems.

6. References

[1] Y. Huang. Levels of AI Agents: from Rules to Large Language Models. arXiv 2405.06643.

[2] M. Morris, J. Sohl-Dickstein, et al. Position: Levels of AGI for Operationalizing Progress on the Path to AGI. arXiv 2311.02462.

[3] AWS. What are AI Agents?

[4] D. Song. CS 294 - Large Language Model Agents. Fall 2024.

[5] C. Stryker. IBM: What are the components of AI agents? March 10, 2025.

[6] J. Barker. What is ZKML? Explanation and Use Cases. January 28, 2025.

[7] K. Lad, M. Akber Dewan, F. Lin. Trust Management for Multi-Agent Systems Using Smart Contracts. 2020.

[8] E. Lisowski. AI Agents vs Agentic AI: What’s the Difference and Why Does It Matter? December 18, 2024.

[9] Z. Xi, W. Chen, et al. The Rise and Potential of Large Language Model-Based Agents: A Survey. arXiv 2309.07864.

[10] Rituals Substack. AI Agent: Research and Applications. November 19, 2024.

[11] A. Gutowska. IBM: What are AI agents? July 3, 2024.

[12] J. Wiesinger, P. Marlow, and V. Vuskovic. Google AI Agent FAQ. September 2024.

[13] GitHub. OAuth 3.0 TEE Proxy.

[14] G. Dhanuskodi, et al. Creating the First Confidential GPUs. January 8, 2024.

[15] J. Zhu, H. Yin, P. Deng, A. Almeida, S. Zhou. Confidential Computing on Nvidia Hopper GPUs: A Performance Benchmark Study. November 5, 2024.

[16] M. Kasanmascheff. Microsoft Adds Anthropic’s Model Context Protocol to Azure AI and Aligns with Open Agent Ecosystem. March 24, 2025.

[17] K. Wiggers. OpenAI Adopts Rival Anthropic’s Standard for Connecting AI Models to Data. March 26, 2025.

[18] AI Engineer Summit 2025. Building Agents with Model Context Protocol - Full Workshop with Mahesh Murag of Anthropic.