In our previous post, we explored the History of Application Design. In Part 1 of our second Agentic AI series post, we examine the current Web2 AI landscape and its key trends, platforms, and technologies. In Part 2, we explore how blockchain and trustless verification enable the evolution of AI agents into truly agentic systems.

Part I. Evolution of AI Systems: From Web2 to Web3

- Web2 AI Agent Landscape

- Limitations of Centralized AI

- Decentralized AI Solutions

- Web3 AI Agent Landscape

1. Web2 AI Agent Landscape

Current State of Centralized AI Agents

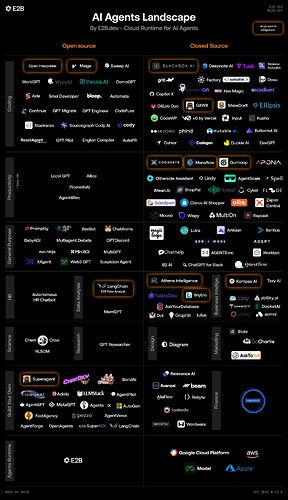

Figure 1. E2B Web2 AI Agent Landscape.

The contemporary AI landscape is predominantly characterized by centralized platforms and services controlled by major technology companies. Companies like OpenAI, Anthropic, Google, and Microsoft provide large language models (LLMs) and maintain crucial cloud infrastructure and API services that power most AI agents.

AI Agent Infrastructure

Recent advancements in AI infrastructure have fundamentally transformed how developers create AI agents. Instead of coding specific interactions, developers can now use natural language to define agent behaviors and goals, leading to more adaptable and sophisticated systems.

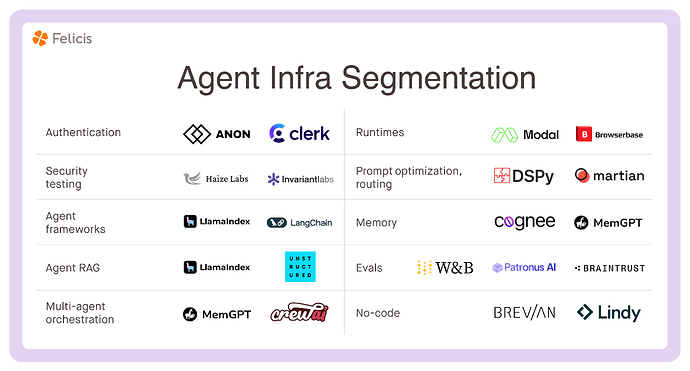

Figure 2. AI Agent Infrastructure Segmentation.

Key advancements in the following areas have led to a proliferation in AI agents:

- Advanced Large Language Models (LLMs): LLMs have revolutionized how agents understand and generate natural language, replacing rigid rule-based systems with more sophisticated comprehension capabilities. They also support multi-step reasoning and planning through “chain-of-thought” prompting.

Most AI applications are built upon centralized LLM models, such as GPT-4 by OpenAI, Claude by Anthropic, and Gemini by Google.

Open-source and open-weight AI models include DeepSeek, Llama by Meta, Gemma by Google, Mistral 7B by Mistral AI, Grok-1 by xAI, Vicuna-13B by LMSYS, and the Falcon models by the Technology Innovation Institute (TII).

- Agent Frameworks: Several frameworks and tools are emerging to facilitate the creation of multi-agent AI applications for businesses. These frameworks support various LLMs and provide pre-packaged features for agent development, including memory management, custom tools, and external data integration. These frameworks significantly reduce engineering challenges, accelerating growth and innovation.

Top agent frameworks include Phidata, OpenAI Swarm, CrewAI, LangChain’s LangGraph, LlamaIndex, Microsoft’s open-source AutoGen, Vertex AI, and Langflow, which let developers build AI assistants with minimal coding.

- Agentic AI Platforms: Agentic AI platforms focus on orchestrating multiple AI agents in a distributed environment to solve complex problems autonomously. These systems can adapt dynamically and collaborate, allowing for robust scaling solutions. These services aim to transform how businesses utilize AI by making agent technology accessible and directly applicable to existing systems.

Top agentic AI platforms include Microsoft AutoGen, LangChain’s LangGraph, Microsoft Semantic Kernel, and CrewAI.

- Retrieval Augmented Generation (RAG): RAG allows LLMs to access external databases or documents before responding to queries, which improves accuracy and reduces hallucinations. Because new information is retrieved at query time, agents can draw on new sources without the need to retrain models.

The top RAG tools are from K2View, Haystack, LangChain, LlamaIndex, RAGatouille, and open-source EmbedChain and InfiniFlow.
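The retrieve-then-generate flow described above can be illustrated with a minimal sketch. It is not taken from any of the tools listed: the document store, the bag-of-words similarity, and the function names (`retrieve`, `build_prompt`) are all assumptions made for illustration, and a production system would typically use learned embeddings and a vector database instead.

```python
import math
import re
from collections import Counter

# Toy document store; the contents are illustrative placeholders.
DOCUMENTS = [
    "Filecoin stores encrypted data on unused hard drive space in exchange for tokens.",
    "Retrieval augmented generation lets a model consult external documents before answering.",
    "Session keys restrict an agent to whitelisted smart contracts.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda d: cosine_similarity(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Prepend the retrieved context to the question before it is sent to an LLM."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


prompt = build_prompt("How does Filecoin store data?", DOCUMENTS)
```

The resulting `prompt` would then be passed to whichever LLM the agent uses; swapping the contents of `DOCUMENTS` changes what the model can draw on without any retraining.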

- Memory Systems: To overcome the limitations of traditional AI agents in handling long-term tasks, memory services provide short-term memory for intermediate steps and long-term memory to store and retrieve information across extended tasks.

Long-term memory includes:

- Episodic Memory. Records specific experiences for learning and problem-solving and is used in context for a present query.

- Semantic Memory. General and high-level information about the agent’s environment.

- Procedural Memory. Stores the procedures used in decision-making and step-by-step thinking used to solve mathematical problems.

Leaders in memory services include Letta, open-source MemGPT, Zep, and Mem0.
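As a rough illustration of how these memory types might sit side by side, the sketch below keeps a bounded short-term buffer alongside episodic, semantic, and procedural stores. The class and method names are hypothetical and do not reflect the APIs of Letta, MemGPT, Zep, or Mem0; real services add persistence, embeddings, and summarization.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class AgentMemory:
    # Short-term memory: a small rolling window of recent events (size is an assumption).
    short_term: deque = field(default_factory=lambda: deque(maxlen=3))
    # Long-term stores, one per memory type described above.
    episodic: list = field(default_factory=list)    # specific past experiences
    semantic: dict = field(default_factory=dict)    # general facts about the environment
    procedural: dict = field(default_factory=dict)  # named step-by-step procedures

    def observe(self, event):
        """Record an event in both the rolling window and the episodic log."""
        self.short_term.append(event)
        self.episodic.append(event)

    def learn_fact(self, key, value):
        self.semantic[key] = value

    def learn_procedure(self, name, steps):
        self.procedural[name] = list(steps)

    def recall_episodes(self, keyword):
        """Return past experiences that mention the keyword (case-insensitive)."""
        return [e for e in self.episodic if keyword.lower() in e.lower()]


memory = AgentMemory()
for event in ["user asked about gas fees", "agent quoted a swap",
              "user approved the swap", "swap settled"]:
    memory.observe(event)
memory.learn_fact("preferred_chain", "Base")
memory.learn_procedure("swap", ["quote", "approve", "execute"])
```

After the four events, the short-term window holds only the three most recent ones, while the episodic log retains all four for later recall.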

- No-code AI Platforms: No-code platforms enable users to build AI models through drag-and-drop tools and visual interfaces or a question-and-answer wizard. Users can deploy agents directly to their applications and automate workflows. By simplifying the AI agent workflow, anyone can build and use AI, resulting in greater accessibility, faster development cycles, and increased innovation.

No-code leaders include BuildFire AI, Google Teachable Machine, and Amazon SageMaker.

Several niche no-code platforms exist for AI agents such as Obviously AI for business predictions, Lobe AI for image classification, and Nanonets for document processing.

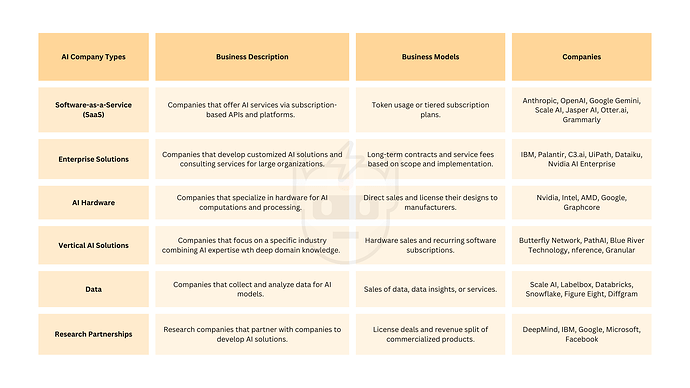

Figure 3. AI Business Models.

Business Models

Traditional Web2 AI companies primarily employ tiered subscriptions and consulting services as their business models.

Emerging business models for AI agents include:

- Subscription / Usage-Based. Users are charged based on the number of agent runs or the computational resources utilized, similar to Large Language Model (LLM) services.

- Marketplace Models. Agent platforms take a percentage of the transactions made on the platform, similar to app store models.

- Enterprise Licensing. Customized agent solutions with implementation and support fees.

- API Access. Agent platforms provide APIs that allow developers to integrate agents into their applications, with charges based on API calls or usage volume.

- Open-Source with Premium Features. Open-source projects offer a basic model for free but charge for advanced features, hosting, or enterprise support.

- Tool Integration. Agent platforms may take a commission from tool providers for API usage or services.

2. Limitations of Centralized AI

While current Web2 AI systems have ushered in a new era of technology and efficiency, they face several challenges.

- Centralized Control: The concentration of AI models and training data in the hands of a few large technology companies creates risks of restricted access, controlled model training, and enforced vertical integrations.

- Data Privacy and Ownership: Users lack control over how their data is used and receive no compensation for its use in training AI systems. Centralization of data also creates a single point of failure and can be a target for data breaches.

- Transparency Issues: The “black box” nature of centralized models prevents users from understanding how decisions are made or verifying the training data sources. Applications built on these models cannot explain potential biases, and users have little to no control over how their data is used.

- Regulatory Challenges: The complex global regulatory landscape concerning AI use and data privacy creates uncertainty and compliance challenges. Agents and applications built on centralized AI models may be subject to regulations from the model owner’s country.

- Adversarial Attacks: AI models can be susceptible to adversarial attacks, where inputs are modified to deceive the model into producing incorrect outputs. Verification of input and output validity is required, along with AI agent security and monitoring.

- Output Reliability: AI model outputs require technical verification and a transparent, auditable process to establish trustworthiness. As AI agents scale, the correctness of AI model outputs becomes crucial.

- Deep Fakes: AI-modified images, speech, and videos, known as “Deep Fakes,” pose significant challenges as they can spread misinformation, create security threats, and erode public trust.

3. Decentralized AI Solutions

The main constraints of Web2 AI—centralization, data ownership, and transparency—are being addressed with blockchain and tokenization. Web3 offers the following solutions:

- Decentralized Computing Networks. Instead of using centralized cloud providers, AI models can utilize distributed computing networks for training and running inference.

- Modular Infrastructure. Smaller teams can leverage decentralized computing networks and data DAOs to train new, specific models. Builders can augment their agents with modular tooling and other composable primitives.

- Transparent and Verifiable Systems. Web3 can offer a verifiable way to track model development and usage with blockchain. Model inputs and outputs can be verified via zero-knowledge proofs (ZKPs) and trusted execution environments (TEEs) and permanently recorded on-chain.

- Data Ownership and Sovereignty. Data can be monetized via marketplaces or data DAOs, which treat data as a collective asset and can redistribute profits from data usage to DAO contributors.

- Network Bootstrapping. Token incentives can help bootstrap networks by rewarding early contributors for decentralized computing, data DAOs, and agent marketplaces. Tokens can create immediate economic incentives that help overcome the initial coordination problems that impede network adoption.
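To make the data DAO idea above concrete, here is a minimal sketch of a pro rata payout. It assumes revenue is denominated in integer token units and that each contributor has a non-negative contribution weight; the function name, the weights, and the rule for handing out leftover units are illustrative assumptions, not the mechanism of any specific DAO.

```python
def distribute_revenue(revenue, contributions):
    """Split `revenue` (integer token units) pro rata by contribution weight.

    Leftover units from integer division go one each to the largest
    contributors (ties broken by name) so the payouts sum to `revenue`.
    """
    if revenue < 0 or any(w < 0 for w in contributions.values()):
        raise ValueError("revenue and weights must be non-negative")
    total = sum(contributions.values())
    if total == 0:
        raise ValueError("at least one contributor needs a positive weight")

    payouts = {member: revenue * weight // total
               for member, weight in contributions.items()}
    remainder = revenue - sum(payouts.values())
    by_weight = sorted(contributions, key=lambda m: (-contributions[m], m))
    for member in by_weight[:remainder]:
        payouts[member] += 1
    return payouts


# Hypothetical contributors and weights.
payouts = distribute_revenue(1000, {"alice": 50, "bob": 30, "carol": 20})
```

Working in integer units avoids floating-point rounding, which matters when payouts are later settled on-chain.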

4. Web3 AI Agent Landscape

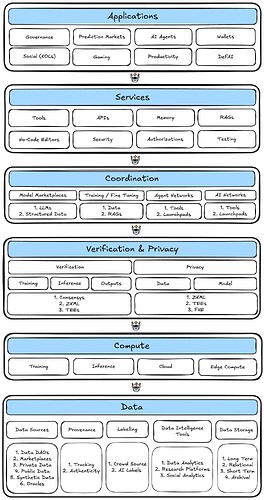

Both Web2 and Web3 AI agent stacks share core components like model and resource coordination, tools and other services, and memory systems for context retention. However, Web3ʻs incorporation of blockchain technologies allows for the decentralization of compute resources, tokens to incentivize data sharing and user ownership, trustless execution via smart contracts, and bootstrapped coordination networks.

Figure 4. Web3 AI Agent Stack.

Data

The Data layer is the foundation of the Web3 AI agent stack and encompasses all aspects of data. It includes data sources, provenance tracking and authenticity verification, labeling systems, data intelligence tools for analytics and research, and storage solutions for different data retention needs.

-

Data Sources. Data Sources represent the various origins of data in the ecosystem.

- Data DAOs. Data DAOs (Vana and Masa AI) are community-run organizations that facilitate data sharing and monetization.

- Marketplaces. Platforms (Ocean Protocol and Sahara AI) create a decentralized market for data exchange.

- Private Data. Social, financial, and healthcare data can be anonymized and brought on-chain for the user to monetize. Kaito AI indexes social data from X and creates sentiment data through their API.

- Public Data. Web2 scraping services (Grass) collect public data and then preprocess it into structured data for AI training.

- Synthetic Data. Public data is limited, and synthetic data derived from real public data has proven to be a suitable alternative for AI model training. Mode’s Synth Subset is a synthetic price dataset built for AI model training and testing.

- Oracles. Oracles aggregate data from off-chain sources and deliver it to blockchains via smart contracts. Oracles for AI include Ora Protocol, Chainlink, and Masa AI.

-

Provenance. Data provenance is crucial for ensuring data integrity, bias mitigation, and reproducibility in AI. Data provenance tracks the data’s origin and records its lineage.

Web3 offers several solutions for data provenance, including recording data origins and modifications on-chain via blockchain-based metadata (Ocean Protocol and Filecoin’s Project Origin), tracing data lineage via decentralized knowledge graphs (OriginTrail), and producing zero-knowledge proofs for data provenance and audits (Fact Fortress, Reclaim Protocol).
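The idea of recording a dataset’s origin and modifications as tamper-evident metadata can be sketched with a simple hash chain, where each lineage record commits to the one before it. This is only an illustration of the principle: the record fields and function names are assumptions, and none of the protocols named above are claimed to work this way.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def record_hash(body):
    """Deterministic SHA-256 hash of a record body."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_record(chain, data_hash, action, actor):
    """Append a lineage record that links to the previous record's hash."""
    prev_hash = chain[-1]["record_hash"] if chain else GENESIS
    body = {"data_hash": data_hash, "action": action,
            "actor": actor, "prev_hash": prev_hash}
    chain.append({**body, "record_hash": record_hash(body)})
    return chain


def verify_chain(chain):
    """Recompute every hash and link; any edit to an earlier record fails."""
    prev_hash = GENESIS
    for entry in chain:
        body = {k: entry[k] for k in ("data_hash", "action", "actor", "prev_hash")}
        if entry["prev_hash"] != prev_hash or entry["record_hash"] != record_hash(body):
            return False
        prev_hash = entry["record_hash"]
    return True


# Hypothetical lineage: a dataset is created, then cleaned.
raw = hashlib.sha256(b"raw dataset").hexdigest()
cleaned = hashlib.sha256(b"cleaned dataset").hexdigest()
lineage = []
append_record(lineage, raw, "created", "contributor-1")
append_record(lineage, cleaned, "deduplicated", "curator-7")
```

Anchoring only the latest `record_hash` on-chain would be enough to detect later edits to any earlier record, since every record commits to its predecessor.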

- Labeling. Data labeling has traditionally required humans to tag or label data for supervised learning models. Token incentives can help crowdsource workers for data preprocessing.

In Web2, Scale AI has an annual revenue of $1 billion and counts OpenAI, Anthropic, and Cohere as customers. In Web3, Human Protocol and Ocean Protocol crowdsource data labeling and reward label contributors with tokens. Alaya AI and Fetch.ai employ AI agents for data labeling.

- Data Intelligence Tools. Data Intelligence Tools are software solutions that analyze and extract insights from data. They ensure compliance and security and boost AI model performance by improving data quality.

Blockchain analytics companies include Arkham, Nansen, and Dune. Off-chain research by Messari and social media sentiment analysis by Kaito also have APIs for AI model consumption.

-

Data Storage. Token incentives allow for decentralized, distributed data storage across independent node networks. Data is typically encrypted and shared across multiple nodes to maintain redundancy and privacy.

Filecoin was one of the first distributed data storage projects allowing people to offer their unused hard drive space to store encrypted data in exchange for tokens. IPFS (InterPlanetary File System) creates a peer-to-peer network for storing and sharing data using unique cryptographic hashes. Arweave developed a permanent data storage solution that subsidizes storage costs with block rewards. Storj offers S3-compatible APIs that allow existing applications to switch from cloud storage to decentralized storage easily.
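Content addressing, the idea behind storing and retrieving data by its cryptographic hash, can be shown in a few lines. The sketch below is a deliberately simplified in-memory store that uses a plain SHA-256 hex digest as the address; IPFS itself uses structured content identifiers (CIDs) and a peer-to-peer network, neither of which is modeled here, and the class name is hypothetical.

```python
import hashlib


class ContentStore:
    """In-memory store where the key of each blob is the hash of its bytes."""

    def __init__(self):
        self._blocks = {}

    def put(self, data: bytes) -> str:
        """Store data and return its content address."""
        address = hashlib.sha256(data).hexdigest()
        self._blocks[address] = data
        return address

    def get(self, address: str) -> bytes:
        """Fetch data and confirm it still matches its address."""
        data = self._blocks[address]
        if hashlib.sha256(data).hexdigest() != address:
            raise ValueError("stored data does not match its address")
        return data


store = ContentStore()
addr = store.put(b"training-shard-001")
```

Because the address is derived from the content, identical data always maps to the same address, and a node cannot serve altered data under that address without the mismatch being detectable.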

Compute

The Compute layer provides the processing infrastructure needed to run AI operations. Computing resources can be divided into distinct categories: training infrastructure for model development, inference systems for model execution and agent operations, and edge computing for local decentralized processing.

Distributed computing resources remove the reliance on centralized cloud networks, enhance security, reduce single points of failure, and allow smaller AI companies to leverage excess computing resources.

- Training. Training AI models is computationally expensive and resource-intensive. Decentralized training compute democratizes AI development while increasing privacy and security, as sensitive data can be processed locally without centralized control.

Bittensor and Golem Network are decentralized marketplaces for AI training resources. Akash Network and Phala provide decentralized computing resources with TEEs. Render Network repurposed its graphics-rendering GPU network to provide computing for AI tasks.

- Inference. Inference computing refers to the resources needed by models to generate a new output or by AI applications and agents to operate. Real-time applications that process large volumes of data or agents that require multiple operations use larger amounts of inference computing power.

Hyperbolic, Dfinity, and Hyperspace specifically offer inference computing. Inference Labs’ Omron is an inference and compute verification marketplace on Bittensor. Decentralized computing networks like Bittensor, Golem Network, Akash Network, Phala, and Render Network offer both training and inference computing resources.

-

Edge Compute. Edge computing involves processing data locally on remote devices like smartphones, IoT devices, or local servers. Edge computing allows for real-time data processing and reduced latency since the model and the data run locally on the same machine.

Gradient Network is an edge computing network on Solana. Edge Network, Theta Network, and AIOZ allow for global edge computing.

Verification / Privacy

The Verification and Privacy layer ensures system integrity and data protection. Consensus mechanisms, zero-knowledge proofs (ZKPs), and TEEs are used to verify model training, inference, and outputs. Fully homomorphic encryption (FHE) and TEEs are used to ensure data privacy.

-

Verifiable Compute. Verifiable compute includes model training and inference.

Phala and Atoma Network combine TEEs with verifiable compute. Inferium uses a combination of ZKPs and TEEs for verifiable inference.

- Output Proofs. Output proofs verify that AI model outputs are genuine and have not been tampered with, without revealing the model parameters. Output proofs also offer provenance and are important for trusting AI agent decisions.

zkML and Aztec Network both have ZKP systems that prove computational output integrity. Marlin’s Oyster provides verifiable AI inference through a network of TEEs.

-

Data and Model Privacy. FHE and other cryptographic techniques allow models to process encrypted data without exposing sensitive information. Data privacy is necessary when handling personal and sensitive information and to preserve anonymity.

Oasis Protocol provides confidential computing via TEEs and data encryption. Partisia Blockchain uses advanced Multi-Party Computation (MPC) to provide AI data privacy.
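One building block commonly used in multi-party computation is additive secret sharing, which gives a feel for how parties can compute on data that none of them sees in full. The sketch below is a textbook illustration only and is not a description of Partisia’s protocol; the modulus, party count, and function names are assumptions.

```python
import secrets

PRIME = 2**61 - 1  # public modulus; all arithmetic is done modulo this prime


def share(secret, n_parties):
    """Split a secret into n random-looking shares that sum to it mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % PRIME)
    return shares


def reconstruct(shares):
    """Recombine all shares; fewer than all of them reveal nothing useful."""
    return sum(shares) % PRIME


def add_shared(shares_a, shares_b):
    """Each party adds its two local shares; no party learns either input."""
    return [(a + b) % PRIME for a, b in zip(shares_a, shares_b)]


# Two private values are shared among three hypothetical parties.
salary_a = share(52_000, 3)
salary_b = share(61_000, 3)
total = reconstruct(add_shared(salary_a, salary_b))
```

Here the three parties jointly compute the sum of two private values, and only the final total is ever reconstructed.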

Coordination

The Coordination layer facilitates interaction between different components of the Web3 AI ecosystem. It includes model marketplaces for distribution, training and fine-tuning infrastructure, and agent networks for inter-agent communication and collaboration.

-

Model Networks. Model networks are designed to share resources for AI model development.

-

LLMs. Large language models require a significant amount of computing and data resources. LLM networks allow developers to deploy specialized models.

Bittensor, Sentient, and Akash Network provide users with computing resources and a marketplace to build LLMs on their networks.

-

Structured Data. Structured data networks are dependent on customized, curated data sets.

Pond AI uses graph foundational models to create applications and agents that utilize blockchain data.

-

Marketplaces. Marketplaces help monetize AI models, agents, and datasets.

Ocean Protocol provides a marketplace for data, data preprocessing services, models, and model outputs. Fetch AI is an AI agent marketplace.

- Training / Fine Tuning. Training networks specialize in distributing and managing training datasets. Fine-tuning networks focus on infrastructure that extends a model’s external knowledge through retrieval-augmented generation (RAG) and APIs.

Bittensor, Akash Network, and Golem Network offer training and fine-tuning networks.

-

Agent Networks. Agent Networks provide two main services for AI agents: 1) tools and 2) agent launchpads. Tools include connections with other protocols, standardized user interfaces, and communication with external services. Agent launchpads allow for easy AI agent deployment and management.

Theoriq leverages agent swarms to power DeFi trading solutions. Virtuals is the leading AI agent launchpad on Base. ElizaOS was the first open-source LLM network. Alpaca Network and Olas Network are community-owned AI agent platforms.

Services

The Services layer provides the essential middleware and tooling that AI applications and agents need to function effectively. This layer includes development tools, APIs for external data and application integration, memory systems for agent context retention, Retrieval-Augmented Generation (RAG) for enhanced knowledge access, and testing infrastructure.

-

Tools. A suite of utilities or applications that facilitate various functionalities within AI agents:

-

Payments. Integrating decentralized payment systems enables agents to autonomously conduct financial transactions, ensuring seamless economic interactions within the Web3 ecosystem.

Coinbase’s AgentKit allows AI agents to make payments and transfer tokens. LangChain and Payman offer options for agents to send and request payments.

-

Launchpads. Platforms that assist in deploying and scaling AI agents, providing resources such as token launches, model selection, APIs, and tool access.

Virtuals Protocol is the leading AI agent launchpad allowing users to create, deploy, and monetize AI agents. Top Hat and Griffain are AI agent launchpads on Solana.

-

Authorization. Mechanisms that manage permissions and access control, ensuring agents operate within defined boundaries and maintain security protocols.

Biconomy offers Session Keys for agents to ensure that agents can only interact with whitelisted smart contracts.
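The whitelisting idea can be sketched as a simple permission check that runs before an agent submits a transaction. This is not Biconomy’s API: the `SessionKey` fields, the expiry time, and the spend limit are assumptions added for illustration, and the contract names are placeholders rather than real addresses.

```python
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class SessionKey:
    agent_id: str
    allowed_contracts: frozenset  # whitelisted contract identifiers, lowercase
    expires_at: float             # Unix timestamp (assumed field)
    spend_limit: int              # max amount per call in token units (assumed field)


def is_authorized(key, contract, amount, now=None):
    """Allow a call only if it targets a whitelisted contract, is within the
    spend limit, and the session key has not expired."""
    now = time.time() if now is None else now
    return (
        now < key.expires_at
        and contract.lower() in key.allowed_contracts
        and 0 <= amount <= key.spend_limit
    )


# Placeholder identifiers; a real deployment would use contract addresses.
key = SessionKey(
    agent_id="trading-agent",
    allowed_contracts=frozenset({"0xdex", "0xlending"}),
    expires_at=1_000.0,
    spend_limit=500,
)
```

In this sketch, a call to `0xdex` for 100 units at time 10 passes, while a call to an unlisted contract, an oversized amount, or any call after the expiry is rejected.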

-

Security. Implementing robust security measures to protect agents from threats, ensuring data integrity, confidentiality, and resilience against attacks.

GoPlus Security added a plug-in that allows ElizaOS AI agents to utilize on-chain security features that prevent scamming, phishing, and suspicious transactions across multiple blockchains.

- Application Programming Interfaces (APIs). APIs facilitate the seamless integration of external data and services into AI agents. Data access APIs provide agents with access to real-time data from external sources, enhancing their decision-making capabilities. Service APIs allow agents to interact with external applications and services, expanding their functionality and reach.

Datai Network provides blockchain data to AI agents through a structured data API. SubQuery Network offers decentralized data indexers and RPC endpoints for AI agents and applications.

- Retrieval-Augmented Generation (RAG) Augmentation. RAG augmentation enhances agents’ knowledge access by combining LLMs with external data retrieval.

- Dynamic Information Retrieval. Agents can fetch up-to-date information from external databases or the internet to provide accurate and current responses.

- Knowledge Integration. Integrating retrieved data into the generation process allows agents to produce more informed and contextually relevant outputs.

Atoma Network offers secure data curation and public data APIs for customized RAGs. ElizaOS and KIP Protocol offer agent plugins to external data sources like X and Farcaster.

-

Memory. AI agents require a memory system to retain context and to learn from their interactions. With context retention, agents maintain a history of interactions to provide coherent and contextually appropriate responses. Longer memory storage allows agents to store and analyze past interactions which can improve their performance and personalize user experiences over time.

ElizaOS offers memory management as part of its agent network. Mem0AI and Unibase AI are building a memory layer for AI applications and agents.

- Testing Infrastructure. Testing platforms are designed to ensure the reliability and robustness of AI agents. Agents can run in controlled simulation environments to evaluate performance under various scenarios. Testing platforms also allow for performance monitoring and continuous assessment of agents’ operations to identify any problems.

Alchemy’s AI assistant, ChatWeb3, can test AI agents through complex queries and tests on function implementations.

Applications

The Application layer sits at the top of the AI stack and represents the end-user-facing solutions. This includes agents that solve use cases like wallet management, security, productivity, gaming, prediction markets, governance systems, and DeFAI tools.

-

Wallets. AI agents enhance Web3 wallets by interpreting user intents and automating complex transactions, thereby improving user experience.

Armor Wallet and FoxWallet utilize AI agents to execute user intents across DeFi platforms and blockchains, allowing users to input their intents via a chat-style interface. Coinbase’s Developer Platform offers AI agents MPC wallets, enabling them to transfer tokens autonomously.

-

Security. AI agents monitor blockchain activity to identify fraudulent behavior and suspicious smart contract transactions.

ChainAware.ai’s Fraud Detector Agent provides real-time wallet security and compliance monitoring across multiple blockchains. AgentLayer’s Wallet Checker scans wallets for vulnerabilities and offers recommendations to enhance security.

-

Productivity. AI agents assist in automating tasks, managing schedules, and providing intelligent recommendations to boost user efficiency.

World3 features a no-code platform to design modular AI agents for tasks like social media management, Web3 token launches, and research assistance.

-

Gaming. AI agents operate non-player characters (NPCs) that adapt to player actions in real-time, enhancing user experience. They can also generate in-game content and assist new players in learning the game.

AI Arena uses human players and imitation learning to train AI gaming agents. Nim Network is an AI gaming chain that provides agent IDs and ZKPs to verify agents across blockchains and games. Game3s.GG designs agents capable of navigating, coaching, and playing alongside human players.

-

Prediction. AI agents analyze data to provide insights and facilitate informed decision-making for prediction platforms.

GOATs Predictor is an AI agent on the Ton Network that offers data-driven recommendations. SynStation is a community-owned prediction market on Soneium that employs AI Agents to assist users in making decisions.

-

Governance. AI agents facilitate decentralized autonomous organization (DAO) governance by automating proposal evaluations, conducting community temperature checks, ensuring Sybil-free voting, and implementing policies.

SyncAI Network features an AI agent acting as a decentralized representative for Cardano’s governance system. Olas offers a governance agent that drafts proposals, votes, and manages a DAO treasury. ElizaOS has an agent that gathers data insights from DAO forums and Discord, providing governance recommendations.

-

DeFAI Agents. Agents can swap tokens, identify yield-generating strategies, execute trading strategies, and manage cross-chain rebalancing. Risk manager agents monitor on-chain activity to detect suspicious behavior and withdraw liquidity if necessary.

Theoriq’s AI Agent Protocol deploys swarms of agents to manage complex DeFi transactions, optimize liquidity pools, and automate yield farming strategies. Noya is a DeFi platform that leverages AI agents for risk and portfolio management.

Collectively, these applications contribute to secure, transparent, and decentralized AI ecosystems tailored to Web3 needs.

Conclusion

The evolution from Web2 to Web3 AI systems represents a fundamental shift in how we approach artificial intelligence development and deployment. While Web2’s centralized AI infrastructure has driven tremendous innovation, it faces significant challenges around data privacy, transparency, and centralized control. The Web3 AI stack demonstrates how decentralized systems can address these limitations through data DAOs, decentralized computing networks, and trustless verification systems. Perhaps most importantly, token incentives are creating new coordination mechanisms that can help bootstrap and sustain these decentralized networks.

Looking ahead, the rise of AI agents represents the next frontier in this evolution. As we’ll explore in the next article, AI agents – from simple task-specific bots to complex autonomous systems – are becoming increasingly sophisticated and capable. The integration of these agents with Web3 infrastructure, combined with careful consideration of technical architecture, economic incentives, and governance structures, has the potential to create more equitable, transparent, and efficient systems than what was possible in the Web2 era. Understanding how these agents work, their different levels of complexity, and the distinction between AI agents and truly agentic AI will be crucial for anyone working at the intersection of AI and Web3.

Resources

- Amos G. Best 5 Frameworks to Build Multi-Agent AI Applications. November 25, 2024.

- Lekha Priya. Top 5 Agentic AI Frameworks to Watch in 2025. January 9, 2025.

- LangChain. State of AI Agents. 2024.

- Roi Lipman. AI Agents: Memory Systems and Graph Database Integration. November 6, 2024.

- James Detweiler and Eric Flaningam. The Agentic Web. August 14, 2024.

- Wojciech Filipek. Top No-Code AI Tools of 2025: In-Depth Guide. December 31, 2024.

- Skanda Vivek. The Economics of Large Language Models. August 9, 2023.

- Teng Yan, Chain of Thought. The Ultimate Crypto AI Primer. July 18, 2024.

- Jonathan King, Coinbase Ventures. Demystifying the Crypto x AI Stack. October 24, 2024.

- 0xJeff. My Data is not Mine. The Emergence of Data Layers.

- Madhavan Malolan, Reclaim Protocol. Proof of Provenance. December 8, 2023.