Creating a decentralized service comes with some non-trivial choices and tradeoffs. This post intends to show a perspective on the topic of provisioning decentralized services.

Application Governance: who decides what about the application? Who, and how, controls the release cycle for the application? Who controls configurations, allowed operators?

Configuration Availability: how, and where from, are instances of an application receiving their configuration and secrets? How does availability of secrets and configuration affect the application?

Service Discovery: how to discover and authenticate applications, instances, peers?

I’ll call the overall problem TEE service provisioning: the process by which a freshly deployed confidential virtual machine becomes a live instance of an application. Provisioning decentralized services remains an unsolved problem because all of the currently available provisioning tools (Ansible, Terraform, Consul, Kubernetes) assume central, trusted operators and administrators. We can’t allow unchecked admin privilege in our decentralized systems!

With decentralization, as with security, the system is only as decentralized as its least decentralized part. If configuration or secrets availability rests on a centralized server, then the system as a whole is centralized as a result — and the same applies equally to governance and service discovery.

Requirements

Tailored decentralization: It’s important to keep decentralization properties at corresponding levels. Forcing service provisioning that has wildly different decentralization properties from the service itself will be inefficient and will create friction — whether it’s much more or much less decentralized.

This applies especially to the governance model — who controls what and how. The governance model should be consistent throughout the application, provisioning included.

This means that both data availability and service discovery should not assume or require specific decentralization properties, and should simply be fit to measure.

Sometimes, transparency is all that is needed. Other times, everything should be a DAO vote.

Application-agnostic: The provisioning system should be able to serve any number of applications with no additional effort. This is crucial as our current tools simply do not allow us to scale.

Disaster recovery: The provisioning system, as well as the provisioned systems, must be able to withstand bugs, outages and faults. While the specific disaster recovery properties will be dictated by the application’s requirements, the provisioning system itself should not negatively affect the ability to recover from a disaster.

Guidelines

More malleable than requirements, guidelines are the product of our experience running TEE services for some time now.

Onchain Governance: The only real way to perform governance in a decentralized, or even properly transparent way. While we want to tailor decentralization guarantees to apps — they are free to pick their governance model — all apps must be governed onchain.

TLS-First: aTLS is not portable enough, as it requires both client and server to implement the custom protocol. In particular, this means we can’t readily use most standard tooling like curl or nginx. Performing attestation out of band and relying on regular TLS certificates in all hot paths is much preferred for performance, ease of use, and developer experience.

Keep public IPs in DNS: Similar to the above point, we don’t have to reinvent the wheel when it comes to discovering public endpoints for applications: just keep them in DNS records. Perhaps counterintuitively, this has no negative impact on liveness or decentralization properties in practice, as IPs and instances are fully controlled by their respective infrastructure operators anyway.

Service discovery, not network topology: Do not assume a specific network topology, since we will not find a good enough fit for our applications. Instead, allow service and public endpoint discovery for bootstrapping any network topology the application needs. If IPs have to be kept confidential, either put them encrypted into DNS, or put TEE-aware bootnodes in DNS and use those to discover peers. Note that IPs can point to external L4 load balancers rather than instances themselves, but you can’t easily deploy L7 load balancers outside of the TEE VMs.

Multiple, content-addressed, data availability backends: While onchain governance is one-size-fits-all, the same cannot be said of any data availability (storage) backend. Some applications will prefer something like onchain L2 storage, while others would much prefer GitHub or S3, or even Vault, for their DA. This comes down to a fundamental cost vs availability vs security tradeoff, especially when it comes to secrets, and we cannot pick one solution over the others without knowing the application’s specific needs.

The big benefit of using content addressing is that we decouple data from any specific storage, which means we can mirror all the data as necessary to avoid both lock-in and bad liveness.

Proposed Solution

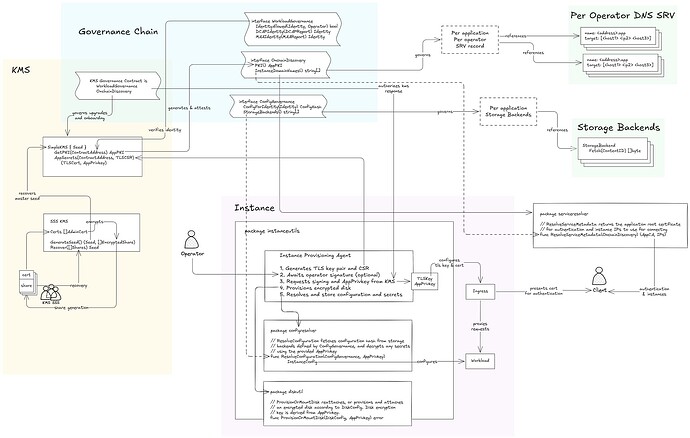

Here I provide an early but comprehensive and practical framework for decentralized provisioning of TEE services. It hopefully addresses the key challenges of application governance, configuration and secrets availability, service discovery and authentication.

If you are interested in more detail than I go into in this post, see the implementation prototype and its documentation.

Architecture diagram

Standard onchain TEE service governance interface

Provides transparent, immutable governance for TEE identity verification. It is implemented as a mapping of TEE report data to workload identities (currently hashes of the various report fields). This simplifies versioning and maintenance: a workload identity is a single handle shared by all instances running the same workload, and it always changes on upgrades. Workload identities are then allowlisted, and the allowlist can optionally include infrastructure operator authorization.

Keeping the mapping of reports to workloads and allowlist separate lets us reuse the same interfaces and implementation regardless of which parts of the measurement are important, and how they are structured. This is specifically necessary to parse runtime extensions to measurement, as well as measured configuration fields.
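As a rough illustration of the two separate steps (report to identity, then identity to allowlist), here is a minimal offchain sketch. The encoding used in `WorkloadIdentity` (sorted register index, length-prefixed value, SHA-256) and the in-memory `Allowlist` type are assumptions made for this example; the real contracts define their own identity computation.

```go
package main

import (
	"crypto/sha256"
	"encoding/binary"
	"fmt"
	"sort"
)

// WorkloadIdentity hashes the selected measurement registers into a
// single 32-byte handle. The encoding is an assumption of this sketch.
func WorkloadIdentity(measurements map[int]string) [32]byte {
	indexes := make([]int, 0, len(measurements))
	for i := range measurements {
		indexes = append(indexes, i)
	}
	sort.Ints(indexes) // map iteration order is random, so sort first

	h := sha256.New()
	var buf [8]byte
	for _, i := range indexes {
		binary.BigEndian.PutUint64(buf[:], uint64(i))
		h.Write(buf[:])
		binary.BigEndian.PutUint64(buf[:], uint64(len(measurements[i])))
		h.Write(buf[:])
		h.Write([]byte(measurements[i]))
	}
	var id [32]byte
	copy(id[:], h.Sum(nil))
	return id
}

// Allowlist is an in-memory stand-in for the onchain allowlist.
type Allowlist struct {
	identities map[[32]byte]bool
	operators  map[[20]byte]bool // empty means operators are not checked
}

// IdentityAllowed mirrors the shape of the governance client method.
func (a *Allowlist) IdentityAllowed(identity [32]byte, operator [20]byte) (bool, error) {
	if !a.identities[identity] {
		return false, nil
	}
	if len(a.operators) > 0 && !a.operators[operator] {
		return false, nil
	}
	return true, nil
}

func main() {
	v1 := WorkloadIdentity(map[int]string{0: "aa", 1: "bb"})
	v2 := WorkloadIdentity(map[int]string{0: "aa", 1: "cc"}) // upgraded workload
	list := &Allowlist{identities: map[[32]byte]bool{v1: true}}

	ok1, _ := list.IdentityAllowed(v1, [20]byte{})
	ok2, _ := list.IdentityAllowed(v2, [20]byte{})
	fmt.Println(ok1, ok2)
}
```

Changing which registers feed `WorkloadIdentity` changes the handle but leaves the allowlist logic untouched, which is the point of keeping the two parts separate.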

Instances should also include TEE report data in a way that allows end users to validate the report as well as governance authorization themselves, separately from any offchain components. In the current implementation this is done through embedding the attestation report in instance’s TLS certificate.

Contract interface

    interface WorkloadGovernance {
        // Allowed workload identities
        function IdentityAllowed(bytes32 identity, address operator /* optional for either authentication or authorization */) external view returns (bool);

        // Identity computation from attestation reports
        function DCAPIdentity(DCAPReport memory report, DCAPEvent[] memory eventLog) external view returns (bytes32);
        function MAAIdentity(MAAReport memory report) external view returns (bytes32);
    }

Golang client interface

    type WorkloadGovernance interface {
        // IdentityAllowed checks whether provided identity is allowed
        // by workload governance contract. Also provides operator address,
        // which the governance contract optionally checks (for either just authentication
        // or both authentication and authorization).
        IdentityAllowed(identity [32]byte, operator [20]byte) (allowed bool, err error)
    }

    // MeasurementsFromATLS is a helper function, extracting attestation data
    // from cvm-reverse-proxy validated attestation headers
    func MeasurementsFromATLS(req *http.Request) (attestationType AttestationType, measurements map[int]string, err error)

    // VerifyDCAPAttestation is a helper function, verifying raw attestation
    // report for any data attested that does not go through attested connections,
    // for example if it's stored on chain.
    func VerifyDCAPAttestation(reportData [64]byte, report []byte) (measurements map[int]string, err error)

    // Similar helper function for MAA

Note: endorsements are assumed to be validated offchain. This doesn’t have to be the case, and for some products it might make sense to move DCAP attestation validation onchain, including endorsements.

Note: using a simple hash of the report’s fields might not be sufficient for all applications, specifically if attestation verification is onchain and is deferred (i.e. stored first, without validating). In that case we might have to introduce an intermediate structure that reports map to, containing more data than just the hash, for example the TCB SVN. The same interface will work in this case, but the implementation will be more involved.

Content-Addressed Configuration and Secrets Framework

Decouples data from specific storage providers using content hashing, supports multiple storage backends with unified interface. If governance of configurations is needed it should happen through the same onchain governance contracts as workload allowlist. Application-wide secrets are either derived from TEE KMS, or encrypted to application-wide onchain attested pubkey.

Multiple config data availability backends are implemented, letting applications reduce their liveness assumptions of external systems. Onchain configuration store is ideal if blockspace is cheap enough, however using Ethereum’s L1 could prove prohibitively expensive.

Note that rotation of the application-wide encryption key is not yet implemented.

Contract interface

    interface ConfigGovernance {
        function ConfigForIdentity(bytes32 identity, address operator) external view returns (bytes32);
        function StorageBackends() external view returns (string[] memory);
    }

Golang client interface

    package configresolver

    // ResolveConfiguration uses provided data availability backends (storageFactory)
    // to resolve the configuration pointed to by provisioning governance contract,
    // and decrypts any secrets using the provided app private key
    func ResolveConfiguration(
        configGovernance interfaces.ConfigGovernance,
        storageFactory interfaces.StorageBackendFactory,
        configHash [32]byte,
        contractAddr interfaces.ContractAddress,
        appPrivkey interfaces.AppPrivkey,
    ) (interfaces.InstanceConfig, error) { /* ... */ }

DNS Service Discovery with TLS Authentication

Utilizes standard DNS for instance discovery and addressing, coupled with application-specific onchain-governed certificate authority. Enables secure and developer-friendly communication with standard TLS, in any network topology the application requires. Each application can have an offchain in-TEE TLS root certificate authority, with the public CA certificate governed onchain for ease of use. Depending on the trust model, an application might also separately present a TLS certificate signed by an ordinary CA, say Let’s Encrypt. In this case the certificate can’t contain the attestation, so if a non-TEE KMS CA is used an attestation of the certificate should be available elsewhere for cross-checking (say in DNS or onchain). Also see zero-trust https.

One notable alternative that should be considered is keeping attested TLS pubkeys in an onchain registry. Just storing the reports, even on L1 Ethereum, is not overly expensive, but verifying them onchain currently is (about 500k gas for the zk proof of DCAP). There are still options to explore here if there are good reasons to prefer onchain management of instances’ TLS certificates over an offchain CA.

TLS is chosen over aTLS for its compatibility with existing tooling, and lower handshake overhead. However, similarly to aTLS, the TLS certificate contains the attestation report allowing users to authenticate and authorize the instance separately from trusting the CA.

DNS is chosen over an onchain registry of IPs as it is much more infrastructure operator friendly, with the security guarantees being basically the same. Since the operator controls the network interface anyway, they can re-point an onchain IP to a malicious instance just as easily as they would re-point a DNS entry, or simply shut down the instance in question. DNS can be hijacked, but so can an IP (if with some more trouble). A DNS outage is extremely unlikely, and if one does happen, newly connecting clients will have bigger things to worry about anyway.

Contract interface

    struct AppPKI {
        bytes CA;
        bytes appEncryptionPubkey;
        bytes PKIattestation;
    }

    interface OnchainDiscovery {
        function PKI() external view returns (AppPKI memory);
        function InstanceDomainNames() external view returns (string[] memory);
    }

Golang client interface

    package serviceresolver

    // ResolveServiceMetadata returns the application metadata necessary
    // to connect to, authenticate, and authorize peers in any tooling
    // which supports TLS
    func ResolveServiceMetadata(
        discoveryContract interfaces.OnchainDiscovery,
    ) (appCA []byte, IPs []string, err error) { /* ... */ }

Onchain-governed TEE Key Management Service

Manages application-wide cryptographic materials, specifically application-wide certificate authority, and application secrets asymmetric encryption keys. The governance of KMS is done through the same interface as for other applications.

Comes with two specific features:

- Optional Shamir’s Secret Sharing t-of-n recovery. Note that the ability to recover the master secret is a fundamental trade-off between liveness and security against collusion. Without recovery, if all instances shut down, the master secret is lost for good; with recovery, admins can combine their shares out of band and possibly gain access to application secrets.

- Optional onchain-driven onboarding using an already bootstrapped instance. This lets KMS operators improve resilience, and is the only way to replicate master secrets that are not split with Shamir’s Secret Sharing.

The implementation includes all the tooling necessary to bootstrap — infrastructure operator utilities and Shamir’s Secret Sharing admin client.

Golang interface

    // KMS handles cryptographic operations for TEE applications.
    type KMS interface {
        // GetPKI retrieves application CA certificate, public key, and attestation.
        GetPKI(ContractAddress) (AppPKI, error)

        // AppSecrets provides all cryptographic materials for a TEE instance.
        AppSecrets(ContractAddress, TLSCSR) (*AppSecrets, error)
    }

Instance provisioning utilities

The instanceutils package provides a comprehensive set of tools and utilities for TEE instance management throughout its lifecycle. These components enable bootstrapping, configuration, and authenticated communication.

Configuration Resolver

- Fetches configuration templates from registered storage backends

- Resolves references to other configuration items

- Decrypts embedded secrets using application private key

- Processes templates into final instance configuration

    // ResolveConfiguration retrieves configuration and decrypts secrets
    func ResolveConfiguration(ConfigGovernance, StorageBackendFactory, AppPrivkey) (InstanceConfig, error)

Service Resolver

- Discovers application instances using onchain domain registrations

- Resolves domains to IP addresses via standard DNS

- Retrieves PKI information for authenticated communication

    // ServiceMetadata contains discovery information for secure connections
    type ServiceMetadata struct {
        PKI interfaces.AppPKI
        IPs []string
    }

    // ResolveServiceMetadata retrieves instance metadata for peer discovery
    func ResolveServiceMetadata(discoveryContract interfaces.OnchainDiscovery) (*ServiceMetadata, error) {
        // Get PKI and domain names, resolve IPs, etc.
    }

Encrypted disk management utility

- Manages encrypted persistent storage using LUKS

- Derives encryption keys from application credentials

- Handles disk provisioning, mounting and configuration

    package diskutil

    type DiskConfig struct {
        DevicePath   string
        MountPoint   string
        MapperName   string
        MapperDevice string
    }

    // ProvisionOrMountDisk reattaches, or provisions and attaches
    // an encrypted disk according to DiskConfig. Disk encryption
    // key is derived from AppPrivkey.
    func ProvisionOrMountDisk(DiskConfig, AppPrivkey) error
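The post does not specify how the disk key is derived from the application key. As one plausible approach, the sketch below uses a single-block HKDF-SHA256 (RFC 5869) built from the standard library’s HMAC. The salt, the `luks:` label and the use of the mapper name for domain separation are all assumptions of this example.

```go
package main

import (
	"crypto/hmac"
	"crypto/sha256"
	"encoding/hex"
	"fmt"
)

// DeriveDiskKey derives a 32-byte disk encryption key from the
// application private key using single-block HKDF-SHA256 (RFC 5869).
// The salt and info label are assumptions made for this sketch.
func DeriveDiskKey(appPrivkey []byte, mapperName string) [32]byte {
	// Extract: PRK = HMAC(salt, input key material).
	extract := hmac.New(sha256.New, []byte("tee-disk-key-salt"))
	extract.Write(appPrivkey)
	prk := extract.Sum(nil)

	// Expand: T(1) = HMAC(PRK, info || 0x01); one block is 32 bytes.
	expand := hmac.New(sha256.New, prk)
	expand.Write([]byte("luks:" + mapperName))
	expand.Write([]byte{0x01})

	var key [32]byte
	copy(key[:], expand.Sum(nil))
	return key
}

func main() {
	appPrivkey := []byte("placeholder application private key bytes")
	data := DeriveDiskKey(appPrivkey, "data")
	logs := DeriveDiskKey(appPrivkey, "logs")
	fmt.Println(hex.EncodeToString(data[:]))
	fmt.Println(hex.EncodeToString(logs[:]))
}
```

Deriving the key instead of storing it means a restarted instance that obtains the same application key can reattach its disk without any extra state.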

Autoprovisioning sample

Simple automated TEE instance provisioning utility:

- KMS-derived disk encryption

- TLS Certificate management for authenticated communication

- Configuration retrieval

- Operator authentication

    // Provisioner handles the TEE instance bootstrapping process
    type Provisioner struct {
        AppContract    interfaces.ContractAddress
        DiskConfig     DiskConfig
        ConfigFilePath string
        TLSCertPath    string
        TLSKeyPath     string
        // Additional fields...
    }

    // Do performs the provisioning process
    func (p *Provisioner) Do() error {
        // Generate CSR, register with KMS, configure encrypted storage, etc.
    }

Request for feedback

With the ideas still in flux, feedback is greatly appreciated and impactful!

Do you see any aspect of provisioning services not taken into account?

Do the guidelines and solutions surprise you in any way?

Do you see any aspect of this framework that lacks a clear route to matching an application’s decentralization requirements?

Would you like to see any of the issues or solutions expanded on?

Are you solving a similar problem — and if so, how? Did you arrive at different conclusions?

Thanks!